
For some time now, I have been hearing discourse about the ills AI will wreak on patients and consumers if it is used in healthcare. Most of this fear or scepticism comes from a good place, with the intent of ensuring that AI is safe and efficient when used in healthcare. However, sometimes you come across fearmongering, gatekeeping, and reality-denying statements that make you shake your head in dismay and question the motives behind them. Please don't get me wrong: my work over many years has been devoted to safe and effective, i.e. translatable, AI in healthcare, as evidenced in my publications and projects. I am all for constructive discourse and work that brings about the appropriate and safe adoption of AI in healthcare. However, in a field (healthcare) that has fallen far behind other sectors in adopting AI, irrational fearmongering (if not doomsaying) in the garb of ethics, patient safety, and regulation benefits no one, least of all the most critical population in healthcare: the patients.

There are two famous cases that critics of AI in healthcare cite to show the harm AI will wreak on patients and consumers of healthcare (yes, just two notable ones in over 40 years of AI use in medicine). One is from some years back and the other is recent. Let us review both to understand whether AI was intrinsically at fault, or whether the fault lay in the way the technology was constructed (by humans) and used (read "human oversight" and "policy").

In this famous and widely cited study from 2019, the authors found that an algorithm widely used in US hospitals was discriminating against African Americans, keeping them away from much-needed personalised care. I will leave you to read the study and its findings, which I am not disputing. What I am critiquing is how a minority is using the findings to demonise AI. Here are the main arguments for why this interpretation is shallow and reflects a poor understanding of the context.

1. The algorithm was trained to predict future healthcare costs, not health needs directly. Using cost as a proxy for health needs is what introduced racial bias, because less money is spent on Black patients with the same level of illness. The algorithm was accurately predicting costs, just not illness.
2. When the algorithm was instead trained to directly predict measures of health, such as the number of active chronic conditions, the racial disparities in its predictions were greatly reduced. This suggests the algorithmic methodology itself was sound, but the choice to predict costs rather than health was problematic.
3. The algorithm excluded race as a feature. Racial bias arose from differences in costs by race conditional on health needs, so race was not directly encoded, but indirect effects introduced bias.
4. The study also notes that doctors using the algorithm's output do redress some of the bias, but not enough to eliminate the disparities. This suggests that the way healthcare professionals interpret and act on the algorithm's predictions also contributes to the outcome.
5. The authors note that predicting future costs as a proxy for health needs is very common, and is used by non-profit hospitals, academics, and government agencies. So it appears to be an industry-wide issue, not something unique to this manufacturer's methodology.
6. When the authors collaborated with the manufacturer to change the algorithm's label to an index combining predicted costs and predicted health needs, bias was greatly reduced. This suggests the algorithm can predict health accurately when properly configured.

Overall, the key limitations seem to have been in the problem formulation and in the real-world application of the algorithm to guide decisions, not in fundamental deficiencies of the algorithm itself or of the manufacturer's approach. These issues introduced racial disparities, but an algorithm tuned to directly predict health could avoid them.

Now, let us review a more recent case, which is generating headlines and a lawsuit. UnitedHealthcare, the largest health insurance company in the US, is alleged to have used a faulty algorithm to deny critical care to elderly patients. The lawsuit alleges that elderly patients were prematurely removed from rehabilitation and care centres based on the algorithm's recommendations, overriding physicians' advice. I will leave you to read the details in this well-written piece. If the information presented is confirmed, it is an unfortunate and shocking episode. However, returning to the crux of my argument: is AI as a tool the key driver behind this episode, or is the real issue how the tool was constructed and is used by the organisation?
Reviewing the details available from the media, several aspects become obvious.

1. The AI tool is claimed to have a very high error rate of around 90%, incorrectly deeming patients as not needing further care. An AI that had been properly trained and validated to predict patient needs should not have such an egregiously high error rate.
2. UnitedHealth Group set utilization management policies that appear to provide incentives to deny claims and limit care. The AI tool may simply be enforcing those harsh policies rather than independently deciding care needs.
3. Based on the available information, there are not enough details on how the AI was developed, trained, and validated before being deployed in real healthcare decisions (the organisation has not given the media access to the algorithm). Proper processes may not have been followed, leading to poor performance.
4. The federal lawsuits note that UnitedHealth Group did not ensure accuracy or remove bias before deploying the tool. This lack of due diligence introduces preventable errors.

No AI exists in a vacuum; it requires ongoing human monitoring and course correction. If the high denial rates were noticed, why was the tool not fixed or discontinued? The lack of accountability allows problems to persist. While further technical details on the AI system itself would be needed to assess it thoroughly, the high denial rates, perverse financial incentives, lack of validation procedures, and lack of human accountability strongly indicate issues with the overall organisation, policies, and deployment, not necessarily flaws in AI technology itself. In my presentations on AI safety in healthcare, I often state: "AI is a tool; don't award it sentience and autonomy when it is not technically capable of either. Even if it were to acquire these capabilities, it should still be under a proper governance framework." Now let us consider both cases above and where the key issue lies.
As with most new technologies, the humans who implement AI bear responsibility for doing so properly and ethically. In these cases, it is important to assess whether the AI was designed with a specific objective that it is accurately meeting, while that objective, or the data it is based on, leads to unintended consequences. For example, if an AI is programmed to optimise for cost savings, it might do so effectively, but this could inadvertently lead to denying necessary care. If the AI is trained on historical data that contains biases or reflects systemic inequalities, the algorithm might perpetuate or amplify these issues. The problem, in this case, would be the data and the historical context it represents, not the algorithm itself. The way an AI is used within an organisational structure can also significantly affect its impact. If policies or management decisions lead to over-reliance on AI without sufficient human oversight, or if the AI's recommendations are acted on without considering individual patient needs, the issue lies with organisational practices rather than with the AI. While the AI might be functioning as designed, the broader context in which it is deployed (including the data it is trained on, the policies guiding its use, and the human decisions made based on its output) could be contributing to negative outcomes. These factors should be carefully examined and addressed to mitigate harm and ensure that AI tools are used responsibly and ethically in healthcare settings. Over the years, anticipating such issues, my colleagues and I have laid out guidance, as indicated here, here, here and here. Like many well-intentioned critics of AI, I acknowledge the limitations of AI and the harm AI can wreak when it is used unsafely.
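The point that an accurate model can still cause harm through its objective and data can be made concrete with a toy simulation loosely modelled on the first case. This is a minimal sketch under invented assumptions, not a reproduction of the study: two hypothetical groups, A and B, have identical distributions of health need (a count of chronic conditions from 0 to 9), but group B is assumed to receive 30% less spending for the same need. Ranking patients by a perfectly known label and enrolling the top 20% in a care program isolates the effect of the label choice, since no model error is involved.

```python
import random


def simulate_patients(n: int = 10_000, seed: int = 0) -> list[dict]:
    """Toy patients: both groups share the same distribution of health need."""
    rng = random.Random(seed)
    patients = []
    for i in range(n):
        group = "A" if i % 2 == 0 else "B"
        need = rng.randint(0, 9)  # e.g. number of active chronic conditions
        # Assumption (hypothetical): group B receives 30% less spending for the same need.
        spend_per_condition = 1000 if group == "A" else 700
        cost = need * spend_per_condition + rng.gauss(0, 200)
        patients.append({"group": group, "need": need, "cost": cost})
    return patients


def select_top(patients: list[dict], label: str, fraction: float = 0.2) -> list[dict]:
    """Enrol the top `fraction` of patients ranked by `label`.

    A model that predicted the label perfectly would produce exactly this
    ranking, so any disparity here comes from the label choice, not model error.
    """
    ranked = sorted(patients, key=lambda p: p[label], reverse=True)
    return ranked[: int(len(ranked) * fraction)]


def share_of_group(selected: list[dict], group: str) -> float:
    """Fraction of the selected patients who belong to `group`."""
    return sum(p["group"] == group for p in selected) / len(selected)


if __name__ == "__main__":
    patients = simulate_patients()
    for label in ("cost", "need"):
        enrolled = select_top(patients, label)
        print(f"label={label}: share of group B enrolled = {share_of_group(enrolled, 'B'):.2f}")
```

Under these assumptions, ranking by cost enrols noticeably fewer group B patients than ranking by need, even though each ranking is a perfect predictor of its own label; changing the label, not the ranking machinery, closes the gap.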
However, shunning AI for faults that are obviously not its doing, and doomsaying, is like blaming the vehicle for a road traffic accident when the human driver is clearly at fault. Speaking of vehicles, please stop using the Tesla Autopilot mishap that occurred in 2019 as an example of the ills AI will wreak in healthcare; it is lazy and irrelevant to the healthcare context. In any case, a jury has ruled that Tesla's Autopilot was not at fault for that fatal crash.

"For a better, more accessible and safer healthcare system with active input from AI" - Sandeep Reddy


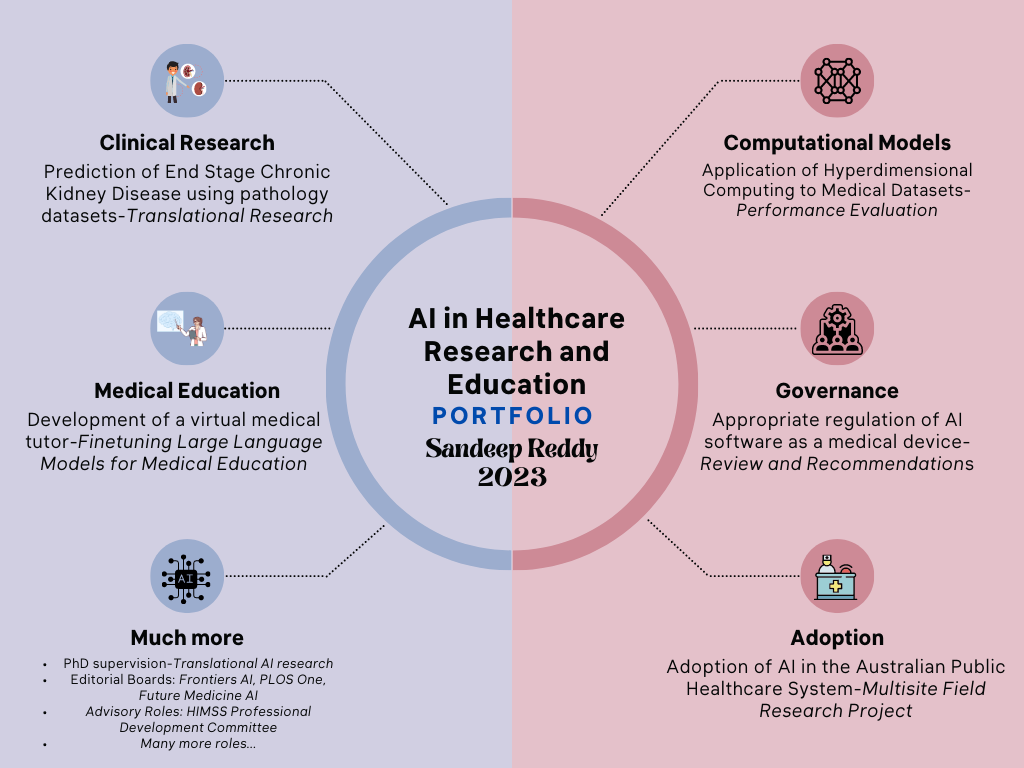

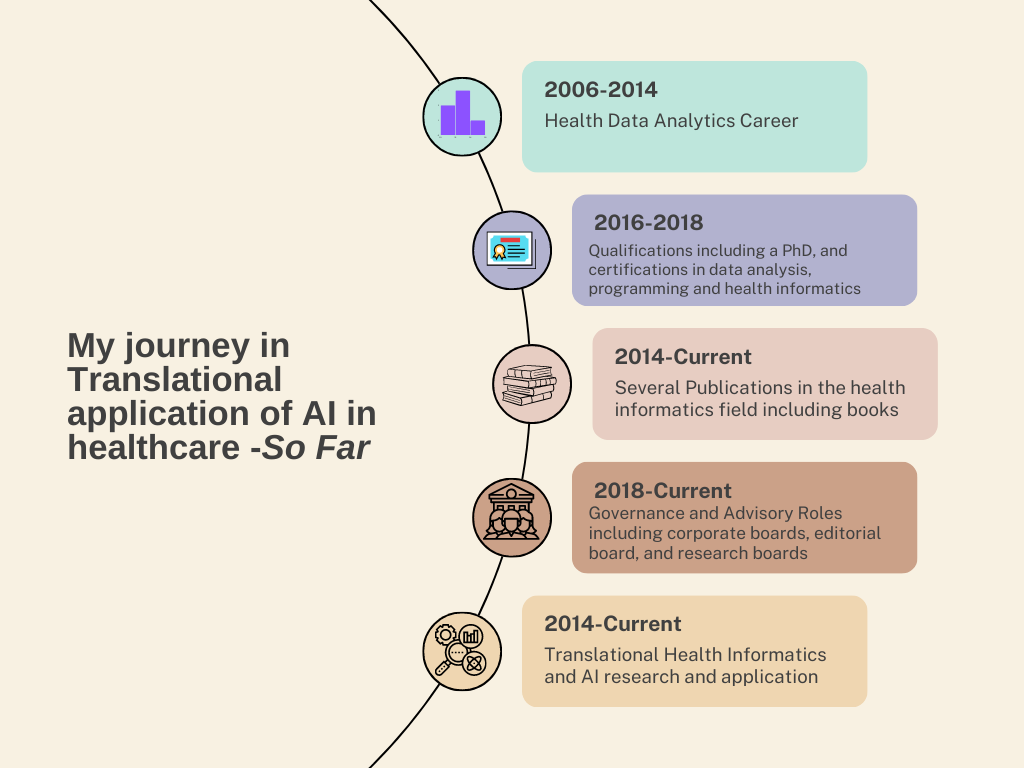

Advancements in Artificial Intelligence: Navigating the Horizon of Development and Manufacturing
6/10/2023

Introduction

Artificial Intelligence has advanced rapidly in recent years and has the potential to revolutionize various industries. One of the key areas in which AI is expected to make significant progress is the development of anthropomorphic robots. Anthropomorphic robots are designed to resemble and simulate human behaviour, both physically and intellectually. This allows them to interact with humans in a more natural and intuitive manner, making them useful in areas such as healthcare, customer service, and entertainment. Another important area of future development in AI is diffuse intelligent systems: AI systems distributed across multiple devices or networks, allowing them to collaborate and communicate seamlessly. These trends have implications for various industries and sectors, and it is essential to explore their potential and challenges.

Understanding Anthropomorphic Robots

Anthropomorphic design is emerging as a key trend in social robotics, with humanoid robots able to engage users through lifelike physical forms and behaviours. Anthropomorphic robots, also known as humanoid robots, are designed to mimic human appearance and behaviour. Anthropomorphic elements such as faces, speech, and body language cues leverage humans' innate social instincts and expectations when interacting with machines. Anthropomorphism plays a crucial role in the development of humanoid robots, which are expected to become more prominent. Their ability to resemble humans both physically and behaviourally makes them well suited to general-purpose assistance tasks in human environments. Humanoid robots are specifically designed to serve humans, owing to their anthropomorphic structure, friendly design, and ability to navigate various environments.
There is a societal need for these robots to perform tasks such as service assistance and entertainment while interacting with people and adapting to changing surroundings. Anthropomorphism plays a crucial role in creating smart objects that humans perceive as cognitively and emotionally human-like. The field of human-robot interaction aims to develop robots and AI software that can easily be anthropomorphized, because humans have an inherent tendency towards anthropomorphism. Developing humanoid smart objects that resemble humans increases their acceptance among users. As demand grows for humanoid robots suited to living environments or able to assist with everyday tasks, the development of general-purpose humanoid assistant robots has become exceedingly important. Extending the concept, companies can develop conversational AI that can be embedded in humanoid robot platforms. This allows for more natural dialogue, reading of nonverbal signals, and contextual understanding. Anthropomorphic robots have already proven useful in applications such as assisting children with autism, interacting with elderly individuals, and guiding museum visitors. With the increasing focus on anthropomorphic robots in scientific, technical, and socio-economic fields, there is a growing demand for robotic human limb prostheses and other human-like electromechanical devices. Notably, the strategies for AI development in the healthcare sector of certain countries consider the application of AI in intelligent prosthetics, smart cognitive control systems, and the rehabilitation of disabled individuals. However, highly human-like robots risk falling into the “uncanny valley”, where subtle flaws create unease. Designers must balance humanoid elements with obvious mechanical reminders.
When deployed carefully, anthropomorphism can make interactions with robots feel more intuitive, empathetic, and human.

Introduction to Diffuse Intelligent Systems

In addition to anthropomorphic robots, another future trend in AI development is the emergence of Diffuse Intelligent Systems*. This technological advancement holds immense potential for addressing intricate challenges and optimizing processes across a wide range of domains. Diffuse Intelligent Systems (DIS) are artificial intelligence distributed across multiple devices and platforms, operating largely invisibly in the background to drive optimizations and efficiencies. DIS leverage cloud computing, edge computing, and distributed ledger technologies to coordinate large networks of intelligent agents. These agents gather data, share insights, and make decentralized decisions to enact changes across infrastructure, applications, and end-user experiences. A key advantage of DIS is their ability to operate at scale across heterogeneous environments, adapting dynamically to changing conditions. Through emergent intelligence, DIS aim to provide ambient, optimized intelligence across digital ecosystems.

To realize the potential of DIS, key technical challenges remain. Secure protocols are needed to enforce privacy and access controls across distributed agents. New decentralized learning techniques must be developed to synthesize insights from diverse data silos, and standardized interfaces are required for agents to coordinate actions on legacy systems. If these challenges can be met, DIS promise more responsive, resilient, and frictionless intelligent automation across all facets of digital life. The next decade may see DIS transform how intelligent systems are built, deployed, and experienced.
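As an illustration of what decentralized learning across data silos can look like, the sketch below implements federated averaging (FedAvg), an existing technique in which each site trains locally and shares only model parameters, never raw data. It is a minimal sketch, not a DIS implementation: the two hypothetical sites, the one-parameter linear model y ≈ w·x, and the learning-rate and round settings are assumptions chosen for clarity, and it omits the secure protocols and privacy controls the paragraph calls for.

```python
from dataclasses import dataclass


@dataclass
class Site:
    """A hypothetical data silo whose (x, y) pairs never leave the site."""
    name: str
    xs: list[float]
    ys: list[float]

    def local_update(self, w: float, lr: float = 0.01, epochs: int = 5) -> float:
        """Run a few epochs of gradient descent on mean squared error for y ≈ w * x."""
        for _ in range(epochs):
            grad = sum(2 * (w * x - y) * x for x, y in zip(self.xs, self.ys)) / len(self.xs)
            w -= lr * grad
        return w


def federated_average(sites: list[Site], rounds: int = 50, w: float = 0.0) -> float:
    """FedAvg: broadcast w, let each site train locally, then average the results."""
    for _ in range(rounds):
        # Each site starts from the shared global weight and trains on its own data.
        updates = [(site.local_update(w), len(site.xs)) for site in sites]
        total = sum(n for _, n in updates)
        # Only weights and sample counts are shared; larger sites count for more.
        w = sum(w_i * n for w_i, n in updates) / total
    return w


if __name__ == "__main__":
    # Hypothetical silos whose raw data both follow y = 3x.
    sites = [
        Site("clinic_a", [1.0, 2.0, 3.0], [3.0, 6.0, 9.0]),
        Site("clinic_b", [0.5, 1.5, 2.5, 3.5], [1.5, 4.5, 7.5, 10.5]),
    ]
    print(f"global weight after FedAvg: {federated_average(sites):.3f}")
```

Weighting each site's update by its sample count is the standard FedAvg choice; with these settings the shared weight converges towards 3 without either site revealing its data.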
*A term I coined to describe this process/phenomenon.

I am often approached by clinicians, healthcare managers, and many others without an engineering or computer science background, asking how to get started in an applied AI role in healthcare. To the disappointment of many, my advice is not very palatable, as it involves many intricate steps to become established in this area. Unfortunately, there is no quick and easy path to achieving your goals in this field. It involves hard work and, importantly, focusing on key areas to ensure you are on the right track. However, if one is determined and willing to put in the necessary effort, the benefits are manifold. Let me relate my journey; it may help add perspective to the advice I provide. Since the start of my professional career, I have worked with various datasets and analytical techniques to inform my work and that of my employers. The data included medical, public health, census, hospital performance, and financial information. I used SPSS, SAS, Power BI, Excel, GIS, Tableau and other tools to analyse and present data in my various roles, especially when working for government agencies across New Zealand, Denmark, and Australia. However, it was during my PhD that I came across machine learning as a powerful analytical tool. I was developing a statistical correlational model linking emergency department performance to hospital funding. Although I ended up using a simple linear equation and trend analysis for my model, my interest in machine learning persisted. A few years after completing my PhD, I approached some established AI researchers and machine learning engineers to seek advice on establishing myself in this area. However, none seemed interested in offering time or advice.
I wouldn't entirely blame them, as my profile (a medical doctor with a career in public health medicine and healthcare management) did not align with their idea of an AI practitioner. What was not obvious to them was my experience with and interest in data analysis, and my determination to establish myself in this area. The first thing I did was spend considerable time reading machine learning and AI books (I must have spent over a thousand dollars stocking my library with them during that period), followed by enrolling in machine learning and data analysis courses, both online and on-site. I also separately started training in Python programming (I never felt inclined to learn R) and practised what I learned by building simple machine learning models (the classic image classification exercises). Then it was time to consider how I could apply what I had learned in medicine and healthcare. I quickly realised that AI as a technology does not operate in isolation in healthcare; it must be part of a digital ecosystem. This meant I had to be across the details of health IT. Fortunately, in Australia, I had a pathway to certification in health IT: Certified Health Informatician Australasia (CHIA). Preparing for the certification meant reading up on all aspects of health IT theory and implementation, followed by a rigorous exam. Getting certified gave me a significant morale boost to progress to the next steps. It is often said that the best way to educate oneself is to write, and in my case this is very accurate. I committed myself to writing, not only to educate myself but also others. I was very fortunate to be working in an environment and role that allowed me to commit to this endeavour.
I started publishing in this field with a book chapter about AI and its application in healthcare, which has since been followed by three books, four book chapters, thirty or so articles (peer-reviewed and non-peer-reviewed) and, of course, many more to come. Writing forces you to be critical not just of your topic but also of your understanding of it, especially when your work goes through peer review. This is why I prioritise publishing in journals, as the peer review process adds rigour and credibility to your writing. During the early period of my AI career, I encountered resistance and unwillingness from others to engage in AI projects. I had noted, through my research, that many areas of healthcare would benefit from AI applications. As they say, if the mountain won't come to you, you must go to the mountain. To fulfil my AI aspirations and gain experience implementing AI projects in medicine, I established my own AI-focused company. Since then, I have had the fortune of other like-minded individuals joining the company. Involvement in the company and several AI projects gave me invaluable hands-on experience developing and deploying AI models. I now play only a governance role in the company, but an industry role brings expertise and experience that pure research roles may not. Over time, the momentum built through these earlier steps positively impacted my AI research and academic work. I now engage in the entire continuum of the AI pathway, from model development and deployment to policy and governance. This is nicely captured in my current academic projects (as of August 2023).
By no means have I reached my peak as an AI in healthcare practitioner; as Robert Frost put it, I “have promises to keep and miles to go before I sleep”. But I feel a certain momentum, or foundation, has been established through my two decades of data analysis and approximately a decade of dedicated AI work, and I now see a clearer path for my involvement in AI in healthcare. I am certainly optimistic about the prospects of AI in medicine and healthcare, and I feel we will lose a significant opportunity to address healthcare's intractable problems if we ignore or do not adopt AI technology. As I look back on my journey and small accomplishments in this field so far (illustrated below), I feel I have a role to play in enabling this adoption in the coming years, and I hope to find collaborators to join me on this journey.

Over the past many years, having sat on several advisory boards and research planning and funding review meetings, I have been concerned by how little many policymakers, researchers and organisational leaders know of evaluation science, and by how they conflate it with health economic assessment and implementation science. Far more concerning, some of them think a health economic assessment supersedes an evaluation and negates the need to commission an evaluation process. I speak here of a healthcare services research context, but this issue may extend beyond this discipline. Therefore, I make a case here for stakeholders, funding decision-makers and researchers alike, to distinguish between the disciplines of implementation science and evaluation science, and between the processes of evaluation and health economic assessment.

The healthcare sector is vast and complex, comprising multiple dimensions of study and implementation. It is driven by a constant need to maximize patient outcomes while ensuring the efficient use of resources. Implementation science and the evaluation process are both critical components of the health and social sciences. Both aim to contribute to improved practices, interventions, and policies across various disciplines, particularly healthcare. While they may appear similar, they encompass different objectives, methodologies, and stages in implementing and assessing evidence-based interventions. Implementation science, closely related to knowledge translation, is a multidisciplinary field that systematically integrates research findings and evidence-based practices into routine, everyday use. The goal is to improve the quality and effectiveness of health services, social programs, and policies. The fundamental aim of implementation science is to bridge the gap between research and practice, often referred to as the "know-do" gap. Implementation science uses various theories, models, and frameworks to identify, understand, and address barriers to the adoption, adaptation, integration, scale-up, and sustainability of evidence-based interventions. It takes into account numerous factors, including the complexities of health systems, the variability of human behaviour, and the diversity of social and political contexts. The evaluation process, on the other hand, is a systematic method for assessing the design, implementation, and utility of programs, interventions, or policies. The primary purpose of evaluation is to judge the value or worth of something in order to guide decision-making and improve effectiveness. There are different types of evaluation, such as formative, summative, process, and outcome evaluation, each focusing on different stages of a program's life cycle.
Evaluations may consider the fidelity of implementation, the outcomes of the intervention, and cost-effectiveness, among other aspects. Although implementation science and evaluation both use mixed methods, their methodological emphases differ. Implementation science prioritizes process-oriented investigations, employing qualitative research to understand human behaviours and system complexities. In contrast, evaluation often emphasizes outcome measures, using quantitative methods to assess the degree to which program goals and objectives have been met. Health economic assessment, often referred to as health economic evaluation, is a tool used to compare the cost-effectiveness of different health interventions. It weighs the benefits of different health programs against their costs, aiming to maximize health outcomes within a given budget constraint. Health economic assessment primarily adopts a macroeconomic perspective, focusing on the overall cost-effectiveness of the healthcare system. It typically employs methods such as cost-effectiveness analysis (CEA), cost-utility analysis (CUA), or cost-benefit analysis (CBA). Its value lies in providing a comparative analysis of the efficiency of different healthcare interventions. Quantifying costs and outcomes (often in quality-adjusted life years, or QALYs) provides a comprehensive view of the economic value of different healthcare choices. This is particularly valuable when resources are scarce and must be allocated to yield the greatest health benefit for the greatest number of people. Health service evaluation, on the other hand, focuses on assessing the quality of care delivered in a specific healthcare setting or by a particular healthcare service. It takes a micro view, examining individual services, care pathways, or providers.
It aims to identify areas for improvement and highlight best practices, focusing on effectiveness, efficiency, and equity in care delivery. Its measures might include patient satisfaction, accessibility of care, timeliness, and adherence to clinical guidelines, among others. Health service evaluation helps pinpoint gaps or deficiencies in care delivery that might not be evident from a macroeconomic view. This detailed scrutiny of specific services can lead to improvements in patient care, satisfaction, and overall health outcomes. It can also highlight systemic issues that might need addressing at a policy level. The principal differences between health economic assessment and health service evaluation stem from their different focuses. The former adopts a broader perspective, taking into account the economic balance of the entire health system. It often informs policy-level decisions on resource allocation, seeking to achieve the maximum health benefit per unit of cost across different healthcare interventions. Health service evaluation, conversely, narrows its lens to the level of the individual service, provider, or care pathway. Its main goal is to improve the quality of care and patient satisfaction within specific healthcare services, not necessarily accounting for the broader economic implications of these improvements. It may, however, indirectly influence economic evaluations by identifying more effective or efficient practices that can then be implemented more broadly, thus improving cost-effectiveness. Thus, while each field (implementation science, health economic assessment, and the evaluation process) has a critical role in improving the quality and outcomes of health service delivery, they are very distinct from each other. Conflating them comes at the expense of sound research and assessment.
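The comparative logic of a cost-utility analysis can be sketched in a few lines. This is a minimal illustration, assuming QALYs are computed as the undiscounted sum of yearly utility weights and using the incremental cost-effectiveness ratio (ICER), the extra cost per extra QALY of a new intervention over a comparator. The function names, costs, utilities, and the willingness-to-pay threshold are all hypothetical.

```python
def qalys(utility_by_year: list[float]) -> float:
    """QALYs as the sum of yearly utility weights (1 = full health, 0 = death).

    Undiscounted for simplicity; real analyses usually discount future years.
    """
    return sum(utility_by_year)


def icer(cost_new: float, qaly_new: float, cost_old: float, qaly_old: float) -> float:
    """Incremental cost-effectiveness ratio: extra cost per extra QALY."""
    delta_qaly = qaly_new - qaly_old
    if delta_qaly == 0:
        raise ValueError("ICER is undefined when the QALY difference is zero")
    return (cost_new - cost_old) / delta_qaly


def decide(cost_new: float, qaly_new: float, cost_old: float, qaly_old: float,
           threshold: float) -> str:
    """Classify the new intervention against a willingness-to-pay threshold per QALY."""
    d_cost = cost_new - cost_old
    d_qaly = qaly_new - qaly_old
    if d_qaly <= 0 and d_cost >= 0:
        return "dominated"  # no extra health gain for the same or a higher cost
    if d_qaly >= 0 and d_cost <= 0:
        return "dominant"  # at least as effective and no more costly
    ratio = d_cost / d_qaly
    if d_qaly > 0:
        # More effective and more costly: accept if cost per QALY gained is affordable.
        return "cost-effective" if ratio <= threshold else "not cost-effective"
    # Less effective but cheaper: accept only if savings per QALY lost exceed the threshold.
    return "cost-effective" if ratio >= threshold else "not cost-effective"


if __name__ == "__main__":
    usual = qalys([0.5, 0.5, 0.5, 0.5])     # 2.0 QALYs over four years
    new = qalys([0.75, 0.75, 0.75, 0.75])   # 3.0 QALYs over four years
    print(icer(35_000, new, 20_000, usual))            # 15000.0
    print(decide(35_000, new, 20_000, usual, 50_000))  # cost-effective
```

The dominance checks come first because a raw ICER is misleading when its sign flips: a cheaper, more effective option and a costlier, less effective one both produce a negative ratio.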
It is important for evaluators to make these distinctions known, clearly and loudly, while advocating for appropriate evaluation processes to be considered in health service projects and programs.

At last week's Google I/O developer conference, Google announced that PaLM 2 (its latest-generation large language model) will have multi-modal capabilities. This means PaLM 2 can interpret images and videos in addition to interpreting and generating text. OpenAI had previously announced that GPT-4 would have these capabilities too. In other words, the new generation of large language models will offer multi-modal capabilities as standard. How is this significant to the healthcare domain in which I operate?

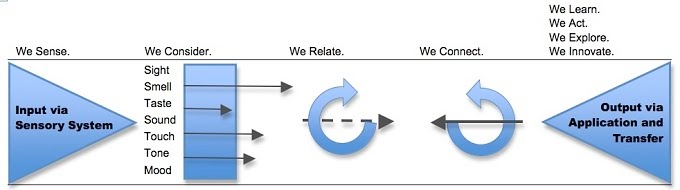

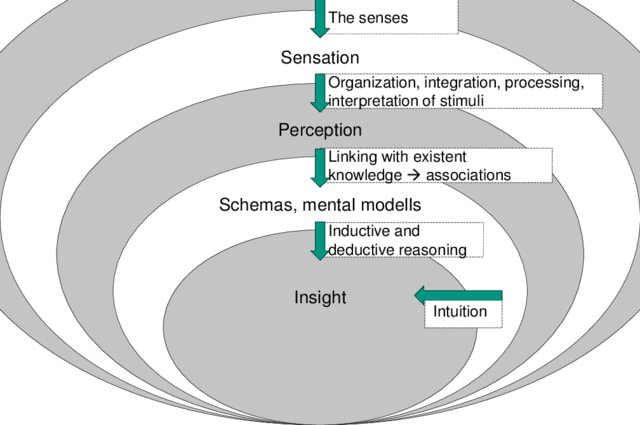

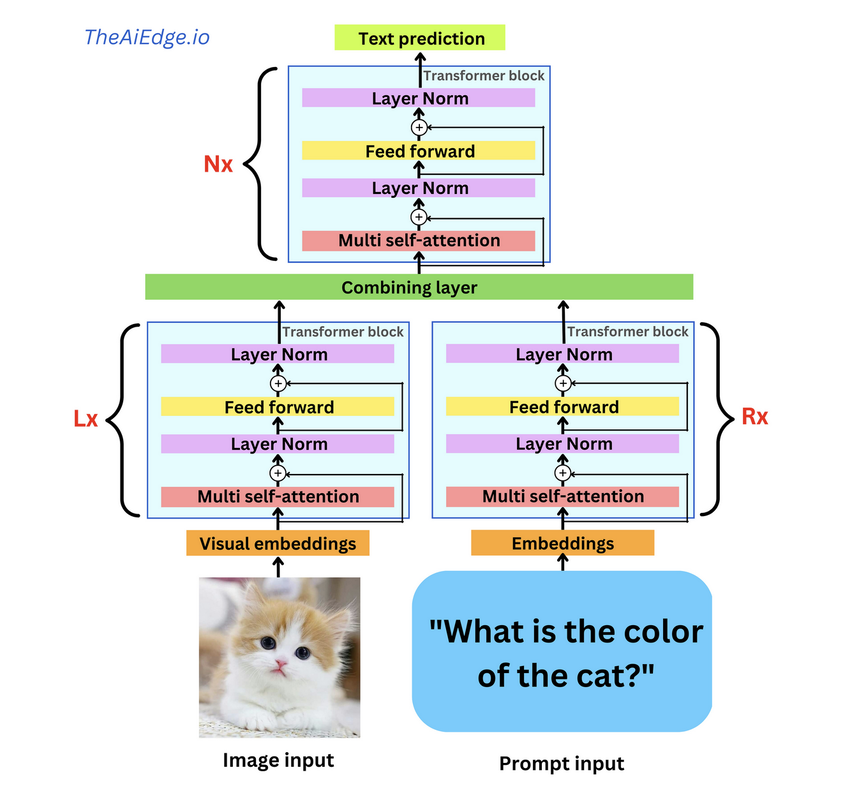

Medical practice is inherently multi-modal. A clinician must interpret patient and laboratory records, take an oral history, undertake a visual examination, and interpret waveform and radiological investigations. Collectively, these inform the clinician's diagnosis and management of the patient. The previous generation of AI models could only contribute to a narrow set of medical tasks, say electronic record analysis or medical image interpretation, but not both in combination. This was mainly due to how the machine learning models were trained (supervised learning on annotated, labelled data) and the intrinsic limitation of the algorithms (even advanced ones) in performing accurately on multi-modal datasets. While regulatory authorities and vendors had a relatively easy task certifying such applications within their task boundaries and for safety, the applications fulfilled only a narrow set of customers' (health services, medical doctors, etc.) requirements. Considering the need to integrate these applications into existing information systems, the economies of scale and ROI were minimal, if not non-existent. The availability of multi-modal (and potentially multi-outcome) functionality may considerably change the AI in healthcare landscape. The ability of a single AI application not only to analyse a radiological investigation but also to link it to the patient's history, derived from analysis of the electronic health record and pathology investigations, will be revolutionary. This will remove the need for stakeholders and purchasers to source multiple AI applications, and will make it easier for health services to set up a governance mechanism to monitor the deployment and delivery of AI-enabled services. At a clinical level, multi-modal AI will give physicians a more comprehensive view when making a diagnosis. Such applications are now not far from entering the commercial space.
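To make the idea of combining modalities concrete, the sketch below shows late fusion, one simple way of merging the outputs of separate per-modality models into a single estimate. It is an illustration under stated assumptions, not how the large multi-modal models discussed here work (they typically learn a joint representation within one network): each hypothetical upstream model (for example, an X-ray classifier and an EHR risk model) is assumed to output a probability for the same diagnosis, and the modality names and weights are invented.

```python
import math

EPS = 1e-6  # keeps probabilities strictly inside (0, 1) so log-odds stay finite


def logit(p: float) -> float:
    """Convert a probability to log-odds, clamping extreme values."""
    p = min(max(p, EPS), 1 - EPS)
    return math.log(p / (1 - p))


def sigmoid(z: float) -> float:
    """Convert log-odds back to a probability."""
    return 1 / (1 + math.exp(-z))


def late_fusion(probs: dict[str, float], weights: dict[str, float]) -> float:
    """Fuse per-modality probabilities via a weighted average of their log-odds.

    Only modalities that have both a score and a weight are used, and their
    weights are renormalised, so a missing modality (e.g. no imaging ordered)
    does not block a prediction.
    """
    used = {m: p for m, p in probs.items() if m in weights}
    if not used:
        raise ValueError("no modality has both a probability and a weight")
    total = sum(weights[m] for m in used)
    z = sum(weights[m] * logit(p) for m, p in used.items()) / total
    return sigmoid(z)


if __name__ == "__main__":
    # Hypothetical outputs of three separate single-modality models.
    scores = {"chest_xray": 0.80, "ehr_history": 0.60, "pathology": 0.70}
    weights = {"chest_xray": 0.5, "ehr_history": 0.3, "pathology": 0.2}
    print(f"fused probability: {late_fusion(scores, weights):.2f}")
```

Averaging log-odds rather than raw probabilities is a design choice; in practice a learned fusion layer would usually replace the hand-set weights.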
In the research domain, last year I was fascinated by this study from South Korea, in which the authors demonstrated a multi-modal algorithm that adopts a BERT-based architecture to maximize generalization performance on both vision-language understanding tasks (diagnosis classification, medical image-report retrieval, medical visual question answering) and a vision-language generation task (radiology report generation). As you may know, BERT is a masked language model based on the Transformer architecture. Since this study, I have seen a wave of studies showcasing the efficacy of multimodal AI, such as this and this. Back to Google's announcement last week: as part of the customised offerings of PaLM 2 for various domains, Med-PaLM 2, developed to generate medical analysis, was also demonstrated. According to this blog, Med-PaLM 2 will interpret and generate text (answer questions and summarise insights) and will have multi-modal functionality to analyse and interpret medical imaging modalities. Considering that GPT-4 can analyse images and that OpenAI offers API access to external developers, it is not hard to foresee multi-modal medical AI applications in the market. Of course, as I see it, multi-modal AI will not be restricted to LLM architectures, and there are different ways to develop such applications. Also, multi-modal functionality alone is not enough; applications also need multi-outcome features. I write this article not only to signal to healthcare stakeholders (policymakers, funders, health services, etc.) the future of medical AI software, but also to forewarn developers of narrow-use-case medical AI to pivot their development strategy to multi-modal AI functionality or be swept away as the floodgates of multi-modal AI are unleashed.

This week, the Future of Life Institute (FLI) issued an open letter calling on technology businesses and Artificial Intelligence (AI) research laboratories to halt work on any AI more advanced than GPT-4.
The letter, signed by some famous names, warns about the dangers advanced AI can pose without appropriate governance and quotes from the Asilomar AI Principles issued in 2017. While well-intentioned in warning about the dangers posed by advanced AI, the letter is a bit premature: its target, Large Language Models (LLMs), is no closer to Artificial General Intelligence (AGI) than humanity is to settling on Mars. Let me explain why. If we consider human intelligence the benchmark for how AI systems are modelled, we must first understand how humans learn. This process is succinctly captured in the illustration below (Greenleaf & Wells-Papanek, 2005). Here we can observe that we utilise our senses to draw upon inputs from the environment, then utilise our cognitive processes to relate the information to previous memories or learning, and then apply it to the current situation and act accordingly. While the actual process in the human brain, incorporating short-term and long-term memories and the versatile cognitive abilities of different parts of the brain, is more complex, it is essential to note that the key steps are the 'relation' and 'connection' to 'memories' or 'existing knowledge', leading to insights, as illustrated in the figure below (Albers et al., 2012). Now let's look at how LLMs operate. These models process data by breaking it into smaller, more manageable units called tokens, a step known as tokenization. Each token is then converted into a numerical representation that the model can work with. Once the data has been tokenized, the model uses complex mathematical functions and algorithms to analyze the relationships between the tokens. Training involves feeding the model large amounts of data and adjusting its internal parameters until it can accurately predict the next token in a sequence, given a certain input.
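To make the next-token idea concrete, here is a deliberately tiny sketch: a whitespace tokenizer maps words to integer IDs, "training" is simply counting which token follows which (a bigram model), and generation repeatedly appends the most frequent follower. This is an assumption-laden toy and not how GPT-4 or any production LLM works: real models use subword tokenizers, learned embeddings and Transformer networks with billions of parameters, and usually sample rather than always taking the top token. It only illustrates the shape of the objective, predicting the next token from what came before.

```python
from collections import Counter, defaultdict


def tokenize(text: str) -> list[str]:
    # Toy whitespace tokenizer; real LLMs use subword schemes such as BPE
    return text.lower().split()


def build_vocab(tokens: list[str]) -> dict[str, int]:
    # Assign each new token the next free integer ID (its numerical representation here)
    vocab: dict[str, int] = {}
    for tok in tokens:
        if tok not in vocab:
            vocab[tok] = len(vocab)
    return vocab


def train_bigram(token_ids: list[int]) -> dict[int, Counter]:
    # "Training" here = counting how often each token ID follows another
    counts: dict[int, Counter] = defaultdict(Counter)
    for current, nxt in zip(token_ids, token_ids[1:]):
        counts[current][nxt] += 1
    return counts


def generate(counts, vocab: dict[str, int], start: str, max_new_tokens: int = 5) -> str:
    # Greedy autoregressive decoding: each prediction is appended and fed back in
    id_to_token = {i: t for t, i in vocab.items()}
    ids = [vocab[start]]
    for _ in range(max_new_tokens):
        followers = counts.get(ids[-1])
        if not followers:
            break  # no continuation was seen in the training data
        ids.append(followers.most_common(1)[0][0])  # ties go to the follower seen first
    return " ".join(id_to_token[i] for i in ids)


if __name__ == "__main__":
    corpus = "the patient has a fracture . the patient needs a sling ."
    tokens = tokenize(corpus)
    vocab = build_vocab(tokens)
    model = train_bigram([vocab[t] for t in tokens])
    print(generate(model, vocab, "the"))  # the patient has a fracture .
```

Even this toy shows the author's point about memory and understanding: the model only reproduces statistical continuations of its training text and has no notion of what a fracture is.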
When the model is presented with new data, it uses its trained parameters to generate outputs by predicting the most likely sequence of tokens following the input. This output can take many forms depending on the application; for example, it could be a text response to a user's query or a summary of a longer text. Overall, large language models use a combination of statistical analysis, machine learning, and natural language processing techniques to process data and generate outputs that mimic human language. This process is illustrated in this representation of GPT-4 architecture, where images are utilised as input in addition to text (source: TheAIEdge.io). AGI refers to the ability of an AI system to perform any intellectual task that a human can. While language is an essential aspect of human intelligence, it is only one component of the broader spectrum of capabilities that define AGI. In other words, language models may be proficient at language tasks but lack the versatility and flexibility to perform tasks outside their training data.

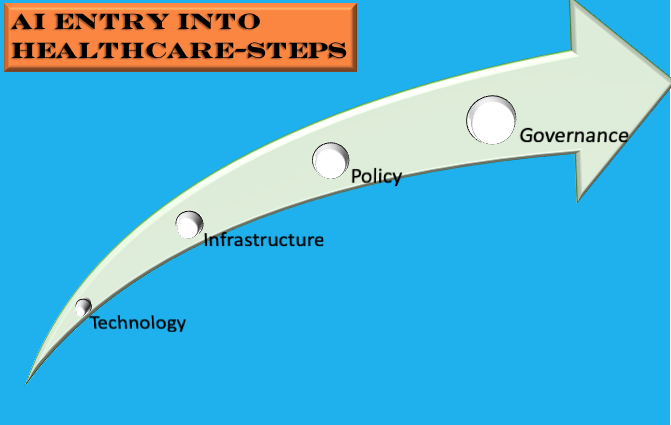

One of the primary limitations of large language models is their lack of generalization. These models are trained on large amounts of data and can generate impressive results within their trained domain. However, they struggle to apply this knowledge to new and unseen tasks. This limitation stems from how language models are trained: on a fixed corpus with a specific objective (predicting the next token), sometimes followed by supervised fine-tuning on specific tasks. As a result, these models cannot reason or make decisions based on broader contexts. Another limitation of language models is their lack of common sense. While these models can generate coherent text and answer some basic factual questions, they cannot understand the world as humans do. For instance, they may be able to generate a recipe for a cake, but they cannot understand the implications of adding too much salt or sugar to the recipe. Furthermore, language models cannot interact with the physical world. AGI systems must be able to interact with the world as humans do. They must be able to perceive their surroundings, reason about the objects and people around them, and take appropriate actions. Language models are limited to processing text and cannot interact with the world meaningfully. Importantly, language models cannot retain memories (whether short-term or long-term), which are so essential to human learning and intelligence. So the autoregressive approach that language models adopt, predicting each token from the preceding ones based on patterns in their training data, is not a substitute for human learning. The road to AGI for large language model developers is to create larger models supported by significant computational resources. These models are not just complex in their parameters but also environmentally unfriendly. Critically, they are black-box models, which even currently available explainable AI frameworks cannot scrutinise.
Some LLM developers have indicated that they will not make their architecture and training process available to the public, a selfish move and a scary development for the general public and the AI community. LLMs can be used to generate text designed to mislead or deceive people, which could spread false information, manipulate public opinion, or incite violence. LLMs can be used to create very realistic deep fakes, which could be used to damage someone's reputation or spread misinformation. LLMs could also automate tasks currently performed by people. This could lead to job losses and economic disruption. It could also lead to a concentration of power in the hands of the few companies that control the LLMs. LLMs are trained on data collected from the real world, which can contain biases. If these biases are not identified and addressed, they could be embedded in the LLMs and lead to systems biased against certain groups of people. LLMs are complex systems that are difficult to understand and secure, which makes them vulnerable to attacks by malicious actors. These issues may have led to the aforementioned letter, but to assume that LLMs are the next step to AGI is incomprehensible. First, LLMs cannot understand the meaning of language in the same way humans do. They can generate text that is grammatically correct and factually accurate, but they do not have the same level of understanding of the world as humans. Second, LLMs are not able to generalize their knowledge to new situations. They are trained on a specific set of data and can only perform tasks they have been trained on. Third, LLMs cannot learn and adapt to new information in the same way humans do. They are trained on a fixed set of data and cannot learn new things without being retrained or fine-tuned. Does intelligence have to be modelled on how humans learn? Couldn't alternative models of intelligence be just as useful? I have argued for this in the past, but is this something we want?
If we can't comprehend how an intelligence model works, it is a recipe for disaster should we no longer be able to control it (read: AI singularity). The most practical and human-friendly approach is developing intelligence models that align with human learning. While daunting and perhaps not linear, this path presents a more benign approach vis-à-vis explainable, transparent, humane, and climate-friendly principles.

On a Sunday morning in our Aussie summer, as I mull over the week and year ahead, I thought I would stretch my mind to consider how AI would be used in various industries in the future. I generally focus on AI applications in healthcare in my practice and will continue to do so, but for once I wanted to hazard some predictions about the general impact of AI ten years from now. Imagine it is 29th January 2033: as you cast your analytical mind across the business, healthcare, automotive, finance, judicial, and arts sectors, you note the following.

Robots: The presence of robots has expanded beyond the industrial sector. Domestic robots are ubiquitous and used for various household tasks. Robots are also being used for security patrols, home deliveries, and providing companionship and care to those who need it.

Virtual Actors: Human actors are now competing with AI (virtual actors) copyrighted to studios or companies, reminiscent of the early twentieth century when studios owned actors. AI-powered animation, NLP and special effects have advanced to the point where one cannot distinguish between human and AI-generated actors on the screen, diminishing the need to rely on fickle celebrities for screen productions.

Art: AI-generated art has become a phenomenon of its own, with 'augmented art' becoming sought after. As it has become practically impossible to distinguish between human and AI-generated art, it is accepted that any painting produced after 2030 is either entirely AI-generated or a hybrid of human and AI talent.
To encourage the generation of high-quality art, competitions are held in which human artists equipped with AI software must deliver art according to set themes.

Judiciary: Most minor or civil cases are analysed and adjudicated by 'AI Judges'. With the backlog of cases in many civil and family courts, authorities have introduced AI-driven applications to screen cases and make recommendations or rulings. These applications draw upon jurisprudence and best practice to suggest recommendations or make rulings. To make these 'AI Judges' acceptable to the community, human judiciary panels provide oversight.

Robo-Taxis: In the urban centres of most developed countries, taxis are now self-driven, with a centralised command centre directing the vehicles to pick up customers and take them to their destinations on request. In addition to electric power, these robot taxis have hydrogen fuel options and can cover long distances.

AI Clinics: These multi-modal and multi-outcome AI-driven health centres offer screening/triaging and low-risk clinical care to registered patients and have become the default clinics in many geographical areas across the world. Continued healthcare workforce shortages and rising healthcare expenditure led authorities in the UK and China to pilot these centres in their cities in 2029-2030. Independent evaluations and peer-reviewed studies published in the Lancet and NEJM in 2031 indicated efficacious, safe and high-quality care for certain medical conditions, delivered at low cost. Drawing upon these findings, several entrepreneurs and companies have developed portable, environmentally friendly facilities with integrated multi-modal AI and telehealth capabilities. Governments have negotiated with these suppliers to trial these facilities in their urban and regional centres.

Change to the Name: Well, AI is still called AI in 2033, but when expanded it is described as 'Augmented Intelligence'.
Experiments integrating AI into the human brain as implants, and offering it as augmented tools through mixed reality devices, have led to an international consensus for AI to be described as 'Augmented Intelligence'.

Universal Income: With much of the blue-collar and a significant share of white-collar work being delivered by AI/robots, some governments have introduced legislation to protect the earning capacity of their citizens through a 'Universal Income' framework, under which all citizens (unless they opt out) draw a legislated income. Revenue for this spending is generated from a mix of taxes, royalties, and trade income. The availability of universal income has led many citizens to pursue their real interests and has spawned an era of innovation and invention.

Post-Note: A year ago, I would have read this article and placed it in the 'Science Fiction' basket. While not purporting to have absolute certainty about the future, especially as a follower of Quantum Physics/Mechanics, I do consider that the current progress with AI and Robotics will lead us to these outcomes in some form or another. In any case, if you and I are around in 2033, let us revisit this article :-)

Introduction

As medical care evolves, clinicians and researchers are exploring the use of technology to improve the quality and effectiveness of medical care. In this regard, technology is being used to deliver precision medicine. This form of medicine is a new approach that uses genomic, environmental and personal data to customize and deliver a precise form of medical treatment, hence the name ‘precision medicine’. One of the most influential factors in delivering precision medicine in recent years has been Artificial Intelligence (AI), specifically one of its forms, Machine Learning (ML).
ML, which uses computation to analyze and interpret various forms of medical data to identify patterns and predict outcomes, has shown increasing success in various areas of healthcare delivery. In this article, I discuss how computer vision and natural language processing, which use ML, can be used to deliver precision medicine. I also discuss the technical and ethical challenges associated with these approaches and what the future holds if the challenges are addressed.

Image Analysis

Various medical imaging techniques, like X-rays, CT, MRI and nuclear imaging, are being used by clinicians to assist their diagnosis and treatment of conditions ranging from cancers to simple fractures. These techniques have become critical in recent years for devising specific treatments. However, the dependency on a limited pool of trained medical specialists (radiologists) to interpret and confirm the images has in many instances increased diagnosis and treatment times. The task of classifying and segmenting medical images can be not only tedious but also time-consuming. Computer Vision (CV), a form of AI that enables computers to interpret images and identify what they depict, has shown a lot of promise and success in recent years. CV is now being applied in medicine to interpret radiological, fundoscopic and histopathological images. The most publicized success of recent years has been the interpretation of retinal images to diagnose diabetic and hypertensive retinopathy. CV powered by neural networks (an advanced form of ML) is said to be able to take over the tedious task of segmenting and classifying medical images and enable preliminary or differential diagnosis. This approach is said not only to accelerate diagnosis and treatment but also to give radiologists more time to focus on complex imaging interpretations.
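The neural networks behind most medical CV work are convolutional neural networks, whose basic operation is sliding a small filter across the image. The sketch below shows one such pass in plain Python, using a hand-picked 1×2 vertical-edge filter on a toy 4×4 "image", followed by a threshold that turns the filter response into a crude segmentation mask. In a real CNN the filter values are learned from labelled images and many filters are stacked in layers; the image, filter and threshold here are illustrative assumptions. Like most deep learning libraries, the function computes cross-correlation (no kernel flip), which those libraries call convolution.

```python
def conv2d(image: list[list[float]], kernel: list[list[float]]) -> list[list[float]]:
    """Valid (no padding) 2D cross-correlation, the core operation of a CNN layer."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    out = [[0.0] * out_w for _ in range(out_h)]
    for i in range(out_h):
        for j in range(out_w):
            # Weighted sum of the image patch currently under the filter
            out[i][j] = sum(
                image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh)
                for dj in range(kw)
            )
    return out


def threshold_mask(response: list[list[float]], level: float) -> list[list[int]]:
    """Mark positions whose filter response exceeds `level` (a crude segmentation)."""
    return [[1 if v > level else 0 for v in row] for row in response]


if __name__ == "__main__":
    # Toy 4x4 "image": dark left half (0), bright right half (1)
    image = [[0, 0, 1, 1] for _ in range(4)]
    vertical_edge = [[-1, 1]]  # responds where intensity rises from left to right
    response = conv2d(image, vertical_edge)
    print(response)                       # [[0, 1, 0], [0, 1, 0], [0, 1, 0], [0, 1, 0]]
    print(threshold_mask(response, 0.5))  # the edge column is marked with 1s
```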
Natural Language Processing

As with CV, Natural Language Processing (NLP) has had a great impact on society in the form of voice assistants, spam filters, and chatbots. NLP applications are also being used in healthcare in the form of virtual health assistants and in recent years have been identified as having potential in analyzing clinical notes and spoken instructions from clinicians. This ability of NLP can lessen the burden on busy clinicians, who are encumbered by the need to document all their patient care in electronic health records (EHRs). By freeing up the time spent writing copious notes, NLP applications can enable clinicians to spend more of their time with patients. Recently, NLP techniques have been used to analyze even unstructured data (free-form and handwritten notes), which makes them useful where written data is not available in digital form or where the data are non-textual. By integrating NLP applications into EHRs, the workflow and delivery of healthcare can be accelerated.

Combination of Approaches

Precision medicine is premised on the customization of medical care based on the individual profile of each patient. By combining NLP and CV techniques, the ability to deliver precision medicine is greatly increased. For example, NLP techniques can scan past medical notes to identify previously diagnosed conditions and medical treatments and present the information in a summary to doctors even as the patient presents to the clinic or the emergency department. Once in the clinic or emergency department, NLP voice recognition applications can analyze the conversation between the patient and clinicians and document it as patient notes for the doctor to review and confirm. This process can free up time for the doctor and ensure the accuracy of notes.
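The note-scanning step described above can be illustrated with a very small rule-based extractor: it looks for known condition phrases in a clinical note and skips any that follow a negation cue in the same sentence, which is the idea behind the NegEx family of algorithms. The lexicon, cue list and example note below are hypothetical; clinical NLP systems use far larger terminologies (for example SNOMED CT) and, increasingly, learned models rather than hand-written rules.

```python
import re

# Hypothetical mini-lexicon: surface phrase -> normalised condition name
CONDITION_TERMS = {
    "type 2 diabetes": "Type 2 diabetes mellitus",
    "hypertension": "Hypertension",
    "asthma": "Asthma",
    "aspirin allergy": "Allergy to aspirin",
}
# Hypothetical negation cues, matched as whole words before the term
NEGATION_CUES = re.compile(r"\b(no|denies|negative for)\b")


def extract_conditions(note: str) -> list[str]:
    """Return normalised, non-negated conditions mentioned in a free-text note."""
    found: list[str] = []
    # Split into rough sentences so a negation cue only affects its own sentence
    for sentence in re.split(r"[.;\n]", note.lower()):
        for term, concept in CONDITION_TERMS.items():
            idx = sentence.find(term)
            if idx == -1:
                continue
            if NEGATION_CUES.search(sentence[:idx]):
                continue  # e.g. "denies asthma"
            if concept not in found:
                found.append(concept)
    return found


if __name__ == "__main__":
    note = "History of type 2 diabetes and hypertension. Denies asthma. Aspirin allergy noted."
    print(extract_conditions(note))
    # ['Type 2 diabetes mellitus', 'Hypertension', 'Allergy to aspirin']
```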
As the doctor identifies the condition affecting the patient and seeks confirmation through relevant medical imaging, automated or semi-automated CV techniques can accelerate the confirmation process. The result is a cohesive process that shortens the time in which the patient receives necessary medical treatment. Let us see how this works in a fictitious example. Mr Carlyle, an avid cyclist, meets with an accident on his way to work when an automobile swerves into the bike lane and flings him from his bicycle. The driver calls an ambulance on noticing Mr Carlyle seated and grimacing with pain. On arrival, the ambulance crew enters his unique patient identifier number, which is accessed from his smartwatch, and rushes him to the nearest emergency department. The AI agent embedded in the hospital’s patient information system identifies Mr Carlyle through his patient identifier number and pulls out his medical details, including his drug allergies. This information is available for the clinicians in the emergency department to review even as Mr Carlyle arrives. After Mr Carlyle is placed in an emergency department bay, the treating doctor uses an NLP application to record, analyze and document the conversation between her and Mr Carlyle. This option allows the doctor to focus most of her time on Mr Carlyle. The doctor suspects a fracture of the clavicle and has Mr Carlyle undergo an X-ray. The CV application embedded in the imaging information system detects a mid-shaft clavicular fracture and relays the diagnosis back to the doctor. The doctor, prompted by an AI clinical decision support application embedded in the patient information system, recommends immobilization with a sling for Mr Carlyle, along with painkillers. His painkillers exclude NSAIDs, as the AI agent has identified that he is allergic to aspirin.
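The allergy check at the end of the scenario is, at its simplest, a rule applied to the patient record. The sketch below filters candidate analgesics against recorded allergies plus a class-level exclusion (an aspirin allergy excludes the NSAID class, mirroring the scenario). The record structure, drug table, identifier and rule are hypothetical and for illustration only, not clinical guidance; real decision support draws on curated drug knowledge bases and leaves the final decision to the clinician.

```python
from dataclasses import dataclass, field


@dataclass
class PatientRecord:
    patient_id: str
    allergies: set[str] = field(default_factory=set)


# Hypothetical drug-to-class table (illustrative, not a clinical reference)
DRUG_CLASS = {
    "aspirin": "NSAID",
    "ibuprofen": "NSAID",
    "naproxen": "NSAID",
    "paracetamol": "non-NSAID analgesic",
}
# Hypothetical rule, mirroring the scenario: an aspirin allergy excludes all NSAIDs
CLASS_EXCLUSIONS = {"aspirin": {"NSAID"}}


def safe_analgesics(record: PatientRecord, candidates: list[str]) -> list[str]:
    """Return candidates not excluded by the patient's recorded allergies."""
    excluded_classes: set[str] = set()
    for allergen in record.allergies:
        excluded_classes |= CLASS_EXCLUSIONS.get(allergen, set())
    return [
        drug
        for drug in candidates
        # Fail closed: drugs missing from the table are never suggested
        if drug in DRUG_CLASS
        and drug not in record.allergies
        and DRUG_CLASS[drug] not in excluded_classes
    ]


if __name__ == "__main__":
    carlyle = PatientRecord(patient_id="HYPOTHETICAL-001", allergies={"aspirin"})
    print(safe_analgesics(carlyle, ["ibuprofen", "naproxen", "paracetamol", "aspirin"]))
    # ['paracetamol']
```

Failing closed on unknown drugs is a deliberate design choice in this sketch: a decision-support rule that silently passes drugs it knows nothing about would be the riskier default.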
Challenges

While the above scenario presents a clear example of how AI, specifically CV and NLP applications, can be harnessed to deliver prompt and personalized medical care, it is contingent on technologies that can actually deliver such outcomes. Currently, CV techniques have not gained the confidence of either regulatory authorities or clinicians to allow automated medical imaging diagnosis (except in minor instances such as diabetic retinopathy interpretation), nor are NLP applications embedded in EHRs to allow automatic recording, analysis and documentation of patient conversations. While some applications that analyze unstructured data have been released to the market, external validation and wide acceptance of these types of applications are some years away. Coupled with these technical and regulatory challenges are the ethical challenges of granting autonomy to non-human agents to guide and deliver clinical care. Further issues may arise from the use of patient identifiers to extract historical details, even for medical treatment, if the patient has not consented to it. Yet the challenges can be overcome as AI technology improves and governance structures to protect patient privacy, confidentiality and safety are established. As the focus on the ethics of AI in healthcare increases and the technological limitations of AI applications are resolved, the fictitious scenario may become a reality not too far into the future.

Conclusion

There is a natural alignment between AI and precision medicine, as the power of AI methods such as NLP and CV can be leveraged to analyze biometric data and deliver personalized medical treatment to patients. With appropriate safeguards, the use of AI in delivering precision medicine can only benefit both the patient and clinician communities. Based on rapidly evolving AI technology, one can predict that the coming years will see wider adoption of precision care models in medicine and thus of AI techniques.
With recent developments in AI in healthcare, one could be forgiven for thinking that the entry of AI into healthcare is inevitable. Recent developments include two major studies: one where machine learning classifiers used for hypothetico-deductive reasoning were found to be as accurate as paediatricians, and another where a deep-learning-based automated algorithm outperformed thoracic radiologists in accuracy and was externally validated at multiple sites. The first study is significant in that machine learning classifiers have now been shown to be useful not only for medical image interpretation but also for extracting clinically relevant information from electronic patient records. The second study is significant in that the algorithm could detect multiple abnormalities in chest x-rays (useful in real-world settings) and was validated multiple times using external datasets. Coupled with these developments, the FDA is gearing up for the inevitable use of AI software in clinical practice by developing a draft framework anticipating modifications to AI medical software. We also have medical professional bodies across the world welcoming the entry of AI into medicine, albeit cautiously, and issuing guidelines, such as this one and this one. Compared to even a year ago, AI seems to have had a resounding impact on healthcare. Even the venerable IEEE is keeping track of where AI is exceeding the performance of clinicians. However, I most certainly think we have yet to see the proper entry of AI into healthcare. Let me explain why, and what needs to be done to enable it. While there is strong evidence emerging about the usefulness of machine learning, especially neural networks, in interpreting multiple medical modalities, the generalization of such successes is relatively uncommon.
While there has been progress in minimizing generalization error (through avoidance of over-fitting) and in understanding how generalization and optimization of neural networks work, the fact remains that accurate prediction of class labels outside the training datasets is not guaranteed. In medicine, this means deep learning algorithms that have shown success in certain contexts are not guaranteed to deliver the same success even with similar data in a different context. There is also the causal probabilistic approach of current machine learning algorithms, which does not necessarily align with the causal deterministic model of diagnostic medicine. I have covered this issue previously here. Even if we accept that machine learning/deep learning models with current limitations are useful in healthcare, hospitals and health services have limited readiness to deploy these models in clinical practice. The lack of readiness spans infrastructure, policies/guidelines and education. Also, governments and regulatory bodies in many countries don't have specific policies and regulatory frameworks to guide the application of AI in healthcare. So, what has to be done? As illustrated below, the following steps have to be adopted for us to see AI bloom in the healthcare context. The first step is the development and use of appropriate AI Technology in Medicine. This means ensuring the validity and relevance of the algorithms being used to address healthcare issues. For example, if a convolutional neural network model has shown success in screening for pulmonary tuberculosis through chest x-ray interpretation, it doesn't necessarily mean it is equipped to identify other chest x-ray abnormalities, say atelectasis or pneumothorax. So the model should be used for the exact purpose for which it was trained. Also, a model trained with a labelled x-ray dataset from a particular region has to be validated with a dataset from another region and context.
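The external-validation point can be made concrete with two standard screening metrics. The sketch computes sensitivity and specificity for a model's predictions on an internal test set and on an external set from another region, then reports how much each metric drops. The labels and predictions are made-up toy values used only to show the calculation, and the 0.05 tolerance is an arbitrary assumption, not a regulatory threshold.

```python
def sensitivity_specificity(y_true: list[int], y_pred: list[int]) -> tuple[float, float]:
    """Binary labels: 1 = finding present, 0 = absent. Assumes both classes occur in y_true."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    return tp / (tp + fn), tn / (tn + fp)


def metric_drops(internal, external) -> dict[str, float]:
    """Internal minus external value for each metric (positive = worse on external data)."""
    names = ("sensitivity", "specificity")
    internal_metrics = sensitivity_specificity(*internal)
    external_metrics = sensitivity_specificity(*external)
    return {n: i - e for n, i, e in zip(names, internal_metrics, external_metrics)}


if __name__ == "__main__":
    TOLERANCE = 0.05  # hypothetical acceptable drop
    y_true = [1, 1, 1, 1, 0, 0, 0, 0]
    internal = (y_true, [1, 1, 1, 1, 0, 0, 0, 1])  # toy predictions, internal test set
    external = (y_true, [1, 1, 0, 0, 0, 0, 1, 1])  # toy predictions, external site
    drops = metric_drops(internal, external)
    print(drops)  # {'sensitivity': 0.5, 'specificity': 0.25}
    print([name for name, d in drops.items() if d > TOLERANCE])  # ['sensitivity', 'specificity']
```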
Another technology issue is the type of machine learning model being used. While deep learning seems to be in vogue, it is not necessarily appropriate in all medical contexts. Because of its limitations with explainability, other machine learning models that lend themselves to interpretability, such as (linear) Support Vector Machines, should be considered.
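To illustrate why a linear SVM is easier to interpret, the sketch below trains one with the Pegasos stochastic sub-gradient method in plain Python and then reads off the learned weights: each weight shows how strongly, and in which direction, a feature pushes the decision. The two features and four data points are hypothetical toy values, and a bias term is handled by appending a constant 1 feature. This readability applies to linear SVMs; SVMs with non-linear kernels are considerably harder to interpret.

```python
def train_linear_svm(X: list[list[float]], y: list[int], lam: float = 0.01, epochs: int = 500) -> list[float]:
    """Basic Pegasos: stochastic sub-gradient descent on the L2-regularised hinge loss.
    Labels must be +1 / -1. Samples are visited in order (no shuffling) for reproducibility."""
    w = [0.0] * len(X[0])
    t = 0
    for _ in range(epochs):
        for xi, yi in zip(X, y):
            t += 1
            eta = 1.0 / (lam * t)  # decreasing step size
            margin = yi * sum(wj * xj for wj, xj in zip(w, xi))
            w = [(1 - eta * lam) * wj for wj in w]  # shrink weights (regularisation)
            if margin < 1:  # hinge loss active: move towards classifying xi with margin
                w = [wj + eta * yi * xj for wj, xj in zip(w, xi)]
    return w


def predict(w: list[float], x: list[float]) -> int:
    return 1 if sum(wj * xj for wj, xj in zip(w, x)) >= 0 else -1


if __name__ == "__main__":
    feature_names = ["feature_a", "feature_b", "bias"]  # hypothetical features
    X = [[2.0, 0.0, 1.0], [3.0, 1.0, 1.0], [-2.0, 0.0, 1.0], [-3.0, -1.0, 1.0]]
    y = [1, 1, -1, -1]
    w = train_linear_svm(X, y)
    for name, weight in zip(feature_names, w):
        print(f"{name}: {weight:+.3f}")  # sign and size show each feature's influence
    print([predict(w, x) for x in X])
```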

The second step in facilitating the entry and establishment of AI in healthcare is Infrastructure. What do I mean by infrastructure? At this stage, even in developed countries, hospitals do not necessarily have the digital platforms and data warehouse structures for machine learning models to operate successfully. Many hospitals are still grappling with the roll-out of electronic health records, a platform that will be essential for machine learning algorithms to mine and query patient data. Also, many machine learning models need structured data for training (although some models, such as this application, can work on unstructured data). This data structuring process includes data labelling and creating data warehouses. Not all hospitals, facing budget crunches, have this infrastructure/capability. Further, the clinical and administrative workforce and the patient community need to be educated about AI if AI applications are to be used in clinical practice and healthcare delivery. How many healthcare organizations have this infrastructure readiness? I doubt many. So infrastructural issues must be addressed before one can think of using AI in the healthcare context. The next step, Policy, is also critical. Policy covers both governmental and institutional strategies to guide the deployment of AI for healthcare delivery, and regulatory frameworks to facilitate the entry of AI medical software into the market and to regulate it. There is definitely progress here, with many governments, national regulatory bodies, medical professional bodies and think tanks issuing guidance on this matter. Yet there are gaps, in that many of these guidance documents are theoretical or cursory in nature or not linked to existing infrastructure. Worse still is the situation in countries where such policies and guidance don't exist at all.
Another issue is the limited funding mechanisms to support AI research and commercialization, which has significantly hampered innovation and the indigenous development of AI medical applications. The final step that needs to be considered is Governance. This step covers not only the regulation frameworks at the national level (necessary to scrutinize and validate AI applications) but also monitoring and evaluation frameworks at the institutional level. It also covers the requirement to mitigate risk involved in the application of AI in clinical care and the need to create patient-centric AI models. These last two requirements are vital in clinical governance and continuous quality improvement. Many institutions have issued ethical guidelines for the application of AI in healthcare, but I am yet to see clinical governance models for the use of AI in clinical care. It is critical that clinical governance models for the application of AI in healthcare delivery are developed. Appropriately addressing the steps I list above (Technology, Infrastructure, Policy and Governance) will most certainly facilitate the entry and establishment of AI in healthcare. Also, with the accelerated developments in AI technology and increasing interest in AI from policymakers, clinical bodies and healthcare institutions, maybe we are not that far away from seeing this occur.

Author: Health System Academic

Archives

December 2023
