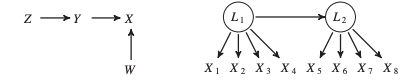

Recently a Twitter war (OK, maybe a vigorous debate) erupted between proponents of deep learning models and long-standing critics of deep learning approaches in AI (I won't name the lead debaters here, but a simple Google search will help you identify them). After following the conversation and studying the maths of probabilistic deep learning approaches myself, I have been thinking about alternatives to the current Bayesian/back-propagation-based deep learning models.

The versatility and deep impact (pun intended) of neural network models in pattern recognition, including in medicine, is well documented. Yet the statistical approach underlying deep learning is largely based on probabilistic inference/Bayesian approaches. The neural networks of deep learning learn the features of relevant classes of data and use them to classify the same or similar data the next time around. This is roughly what we term 'generalisation' in research lingo. Deep learning approaches have received acclaim because they capture invariant properties of the data better, and thus achieve higher accuracies, than their shallow cousins (read: supervised machine learning algorithms). As a high-level summary, deep learning algorithms adopt a non-linear, distributed representation approach to yield parametric models that perform sequential operations on the data fed into them.

This ability has had profound implications for pattern recognition, leading to applications such as voice assistants, driverless cars, facial recognition technology and image interpretation, not to mention the development of the incredible AlphaGo, which has now evolved into AlphaGo Zero. The potential for applying deep learning in medicine, where big data abounds, is vast.
Some recent medical applications include EHR data mining and analysis, medical imaging interpretation (the most popular one being diabetic retinopathy diagnosis), medical voice assistants, and non-knowledge-based clinical decision support systems. Better yet, the realisation of this potential is just beginning, as newer deep learning algorithms are developed in the various Big Tech and academic labs.

Then what is the issue? Aside from the often cited 'interpretability/black-box' issue associated with neural networks (I have previously written that even this may not be a big issue, with solutions like attention mechanisms, knowledge injection and knowledge distillation now available) and their limitations in dealing with hierarchical structures and global generalisation, there is the 'elephant in the room': no inherent representation of causality. In medical diagnosis, probability is important, but causality is more so. In fact, the whole science of medical treatment is based on causality. You don't want to use doxycycline instead of doxorubicin to treat Hodgkin's Lymphoma, or vice versa for Lyme Disease. How do you know if the underlying condition is Hodgkin's Lymphoma or Lyme Disease? I know: different diseases and different presentations, of course. However, the point is that the diagnosis is based on a combination of objective clinical examination, physical findings, sero-pathological tests and medical imaging, all premised on a causal sequence, i.e. Borrelia leads to Lyme Disease and Epstein-Barr virus/family history leads to Hodgkin's. This is why we adopt Randomised Controlled Trials, even with their inherent faults, as the gold standard for incorporating evidence-based treatment approaches. If an understanding of causal mechanisms is the basis of clinical medicine and practice, then there is only so much deep learning approaches can do for medicine.
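To make the gap between probability and causality concrete, here is a minimal sketch in Python. It is a toy simulation, not real clinical data: the variables (`severe`, `treated`, `recovered`) and every probability in it are assumptions I have made up for illustration. Sicker patients are more likely to be treated, so the observed association between treatment and recovery points the wrong way, while setting the treatment by intervention (as a randomised trial does) recovers the true benefit.

```python
import random

def simulate(n, do_treatment=None, seed=0):
    """Draw n patients from a toy structural causal model (all numbers hypothetical).

    severity -> treatment, severity -> recovery, treatment -> recovery.
    If do_treatment is given, treatment is set by intervention and no
    longer depends on severity.
    """
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        severe = rng.random() < 0.5
        if do_treatment is None:
            # Observational regime: sicker patients are treated more often.
            treated = rng.random() < (0.9 if severe else 0.1)
        else:
            # Interventional regime: treatment is fixed from outside.
            treated = do_treatment
        # Treatment adds 0.2 to the recovery probability; severity removes 0.5.
        p_recover = 0.7 - (0.5 if severe else 0.0) + (0.2 if treated else 0.0)
        recovered = rng.random() < p_recover
        rows.append((treated, recovered))
    return rows

def recovery_rate(rows, treated):
    """Fraction of patients with the given treatment status who recovered."""
    outcomes = [r for t, r in rows if t == treated]
    return sum(outcomes) / len(outcomes)

# Association read off observational data.
obs = simulate(100_000)
observed_diff = recovery_rate(obs, True) - recovery_rate(obs, False)

# Effect measured by intervening on treatment.
do1 = simulate(100_000, do_treatment=True, seed=1)
do0 = simulate(100_000, do_treatment=False, seed=2)
causal_diff = recovery_rate(do1, True) - recovery_rate(do0, False)

print(f"observed difference in recovery: {observed_diff:+.2f}")
print(f"causal effect of treatment:      {causal_diff:+.2f}")
```

In this toy setup the observed difference comes out negative (treated patients recover less often, because they were sicker to begin with) while the interventional difference is positive. A purely associational model trained on the observational rows would learn the first number, not the second.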
This is why I believe there needs to be a serious conversation amongst AI academics and the developer community about the adoption of 'causal discovery algorithms' or, better yet, 'hybrid approaches' (a combination of probabilistic and causal discovery approaches). We know 'correlation is not causation', yet under appropriate conditions we can make inferences from correlation to causation. We now have algorithms that search data for causal structure information. The structures they find are represented by causal graphical models, as illustrated below:

Figure I. Acyclic Graph Models (Source: Malinsky and Danks, 2017)

While causal search models do not provide you with a comprehensive causal list, they provide enough information for you to infer a diagnosis and treatment approach (in medicine). Numerous successes have been documented with this approach, and different causal search algorithms have been developed over the past several decades. Professor Judea Pearl, one of the godfathers of causal inference approaches and an early proponent of AI, has outlined structural causal models, a coherent mathematical basis for the analysis of causes and counterfactuals. You can read an introductory version here: https://www.ncbi.nlm.nih.gov/pmc/articles/PMC2836213/

I think it is probably time to pause and think, especially in the context of medicine, about whether a causal approach is better in some contexts than a gradient-informed deep learning approach. Even better if we can think of a syncretic approach. Believe me, I am an avid proponent of the application of deep learning in medicine/healthcare. There are some contexts where back-propagation is better than hypothetico-deductive inference, especially when terabytes/petabytes of unstructured data accumulate in the healthcare system. Also, there are limitations to causal approaches. Sometimes probabilistic approaches are not as bad as critics make out.
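As a rough illustration of how a causal search algorithm can read structure out of correlations, here is a small Python sketch of the conditional independence test that constraint-based methods build on. It is a simplified sketch under my own assumptions (linear relationships, Gaussian noise, simulated data, helper names `pearson` and `partial_corr` invented here), not the procedure from any particular paper. A chain X -> Y -> Z and a collider X -> Y <- Z leave opposite fingerprints: in the chain, X and Z are correlated but become independent once Y is controlled for; in the collider, X and Z are independent but become dependent once Y is controlled for.

```python
import random

def pearson(a, b):
    """Plain Pearson correlation coefficient of two equal-length lists."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    var_a = sum((x - ma) ** 2 for x in a)
    var_b = sum((y - mb) ** 2 for y in b)
    return cov / (var_a * var_b) ** 0.5

def partial_corr(x, z, y):
    """Correlation between x and z after linearly controlling for y."""
    r_xz, r_xy, r_zy = pearson(x, z), pearson(x, y), pearson(z, y)
    return (r_xz - r_xy * r_zy) / ((1 - r_xy ** 2) * (1 - r_zy ** 2)) ** 0.5

rng = random.Random(0)
n = 20_000

# Chain: X -> Y -> Z
x_chain = [rng.gauss(0, 1) for _ in range(n)]
y_chain = [x + rng.gauss(0, 1) for x in x_chain]
z_chain = [y + rng.gauss(0, 1) for y in y_chain]

# Collider: X -> Y <- Z
x_col = [rng.gauss(0, 1) for _ in range(n)]
z_col = [rng.gauss(0, 1) for _ in range(n)]
y_col = [x + z + rng.gauss(0, 1) for x, z in zip(x_col, z_col)]

chain_marginal = pearson(x_chain, z_chain)
chain_given_y = partial_corr(x_chain, z_chain, y_chain)
collider_marginal = pearson(x_col, z_col)
collider_given_y = partial_corr(x_col, z_col, y_col)

print(f"chain:    corr(X,Z) = {chain_marginal:+.2f}, given Y = {chain_given_y:+.2f}")
print(f"collider: corr(X,Z) = {collider_marginal:+.2f}, given Y = {collider_given_y:+.2f}")
```

The two structures share the same skeleton (X and Z are both linked to Y, and not directly to each other), yet the pattern of which correlations vanish tells them apart. That asymmetry is one of the 'appropriate conditions' under which correlations carry information about causal direction.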
You can read a defence of probabilistic approaches, and how to overcome their limitations, here: http://www.unofficialgoogledatascience.com/2017/01/causality-in-machine-learning.html

However, close-mindedness towards alternatives to probabilistic deep learning models, and sole reliance on those models, is a sure route to failing to realise the full potential of AI in Medicine and, even worse, to the failure of the full adoption of AI in Medicine. To address this, some critics of prevalent deep learning approaches are advocating a hybrid approach, whereby a combination of deep learning and causal approaches is utilised. I strongly believe there is merit in this recommendation, especially in the context of the application of AI in medicine/healthcare.

A development in this direction is the 'Causal Generative Neural Network'. This framework is trained using back-propagation but, unlike previous approaches, capitalises on both conditional independences and distributional asymmetries to identify bivariate and multivariate causal structures. The model estimates not only a causal structure but also a differentiable generative model of the data. I will most certainly be following developments with this framework and, more generally, with hybrid approaches, especially in the context of their application in medicine, but I think all those interested in the application of AI in Medicine should be having a conversation about this matter.

Reference: Malinsky, D. & Danks, D. (2017). Causal discovery algorithms: A practical guide. Philosophy Compass 13: e12470, John Wiley & Sons Ltd.


Author: Health System Academic
