
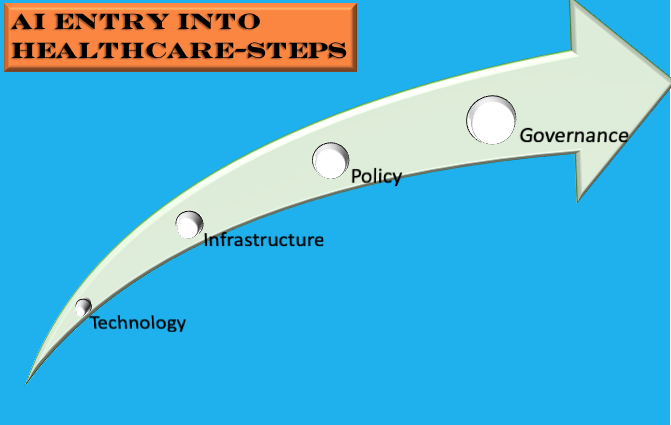

Given recent developments in AI in healthcare, one could be forgiven for thinking that the entry of AI into healthcare is a foregone conclusion. These developments include two major studies. In the first, machine learning classifiers used for hypothetico-deductive reasoning were found to be as accurate as paediatricians. In the second, a deep-learning-based automated algorithm outperformed thoracic radiologists in accuracy and was externally validated at multiple sites. The first study is significant because it shows that machine learning classifiers are useful not only for interpreting medical images but also for extracting clinically relevant information from electronic patient records. The second study is significant because the algorithm could detect multiple abnormalities on chest x-rays (which matters in real-world settings) and was validated on multiple external datasets. Alongside these studies, the FDA is gearing up for the inevitable use of AI software in clinical practice by developing a draft framework that anticipates modifications to AI medical software. Medical professional bodies across the world are also welcoming the entry of AI into medicine, albeit cautiously, and are issuing guidelines, like this one and this one. Compared with even a year ago, AI seems to have had a resounding impact on healthcare. Even the venerable IEEE is keeping track of where AI is exceeding the performance of clinicians.

However, I do not think we have yet seen the proper entry of AI into healthcare. Let me explain why, and what needs to be done to enable it. While strong evidence is emerging for the usefulness of machine learning, especially neural networks, in interpreting multiple medical modalities, such successes have rarely been shown to generalize.
While there has been progress in minimizing generalization error (by avoiding over-fitting) and in understanding how generalization and optimization work in neural networks, correct prediction of class labels outside the training data is still not certain. In medicine, this means that deep learning algorithms that have succeeded in one context are not guaranteed to succeed in a different context, even with similar data. There is also the causal probabilistic approach of current machine learning algorithms, which does not necessarily align with the causal deterministic model of diagnostic medicine. I have covered this issue previously here.

Even if we accept that machine learning and deep learning models, with their current limitations, are useful in healthcare, hospitals and health services have limited readiness to deploy these models in clinical practice. This lack of readiness spans infrastructure, policies and guidelines, and education. In addition, governments and regulatory bodies in many countries lack specific policies and regulatory frameworks to guide the application of AI in healthcare.

So, what has to be done? As illustrated in the accompanying figure, the following steps have to be adopted for AI to flourish in healthcare: Technology, Infrastructure, Policy and Governance.

The first step is the development and use of appropriate AI Technology in medicine. This means ensuring that the algorithms used to address healthcare issues are valid and relevant. For example, if a convolutional neural network has been successful in screening for pulmonary tuberculosis on chest x-rays, that does not necessarily mean it can identify other chest x-ray abnormalities, such as atelectasis or pneumothorax. The model should therefore be used only for the exact purpose for which it was trained. Likewise, a model trained on a labelled x-ray dataset from one region has to be validated on a dataset from another region and context.
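The following minimal Python sketch, which uses only the standard library, makes the difference between internal and external validation concrete. It is not taken from either study mentioned above. The "model" is a hypothetical single-threshold classifier on one synthetic feature. The two "sites" are also hypothetical, and so is the `shift` between them, which stands in for differences in scanners or patient populations. All three were chosen purely for illustration.

```python
import random


def make_site(n_per_class, shift, rng):
    """Simulate one hypothetical hospital site as (feature, label) pairs.

    The single feature stands in for a model-derived image score; `shift`
    mimics a site-level difference such as scanner calibration or patient
    mix. All values are synthetic and purely illustrative.
    """
    data = [(rng.gauss(0.0 + shift, 1.0), 0) for _ in range(n_per_class)]
    data += [(rng.gauss(3.0 + shift, 1.0), 1) for _ in range(n_per_class)]
    rng.shuffle(data)
    return data


def accuracy(data, threshold):
    """Fraction of cases where (feature >= threshold) matches the label."""
    return sum(int(x >= threshold) == y for x, y in data) / len(data)


def fit_threshold(train):
    """Choose the cut-off that maximises accuracy on the training data."""
    return max((x for x, _ in train), key=lambda t: accuracy(train, t))


rng = random.Random(42)                      # fixed seed for reproducibility
site_a = make_site(600, shift=0.0, rng=rng)  # development site
site_b = make_site(600, shift=2.0, rng=rng)  # external site with a shift

# Internal validation: hold out part of the development site's own data.
train, internal_test = site_a[:600], site_a[600:]
threshold = fit_threshold(train)
internal_acc = accuracy(internal_test, threshold)

# External validation: apply the frozen model, unchanged, to the other site.
external_acc = accuracy(site_b, threshold)

print(f"threshold learned at site A:         {threshold:.2f}")
print(f"internal (site A hold-out) accuracy: {internal_acc:.2f}")
print(f"external (site B) accuracy:          {external_acc:.2f}")
```

A hold-out split from the development site only shows how the model performs on patients like those it was trained on. Running the unchanged model on the second site's data is what exposes the drop in accuracy caused by the shift. Real external validation would use a trained model and genuinely independent clinical data.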
Another technology issue is the type of machine learning model being used. While deep learning seems to be in vogue, it is not necessarily appropriate in all medical contexts. Because of its limited explainability, other machine learning models that lend themselves to interpretability, such as Support Vector Machines, should be considered.
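The plain-Python sketch below gives a rough illustration of why a linear-kernel Support Vector Machine is easier to interpret. It trains a linear SVM with a Pegasos-style stochastic sub-gradient method. This is one of several ways to fit an SVM, chosen here only because it needs no libraries. The data are synthetic and standardised, and the feature names are hypothetical. The decision function is a weighted sum of the inputs, so the learned weights can be read directly: their signs and relative sizes show which inputs push a prediction towards the positive class. This holds for linear kernels. Non-linear kernels such as RBF do not expose weights this directly.

```python
import random

# Hypothetical, already-standardised input features (illustrative only).
FEATURES = ["biomarker_a", "biomarker_b", "unrelated_noise"]


def make_data(n, rng):
    """Synthetic data: the label rises with biomarker_a, falls with
    biomarker_b, and ignores the third feature. Labels are +1 / -1."""
    data = []
    for _ in range(n):
        x = [rng.gauss(0.0, 1.0) for _ in FEATURES]
        score = 2.0 * x[0] - 1.0 * x[1] + rng.gauss(0.0, 0.5)
        # Append a constant 1.0 so the last weight acts as a bias term.
        data.append((x + [1.0], 1 if score > 0 else -1))
    return data


def train_linear_svm(data, lam=1.0, iters=20000, seed=0):
    """Pegasos-style stochastic sub-gradient descent on the L2-regularised
    hinge loss. The bias is folded in as a constant feature, so it is
    regularised too, a simplification that is acceptable for a sketch."""
    rng = random.Random(seed)
    w = [0.0] * len(data[0][0])
    for t in range(1, iters + 1):
        x, y = rng.choice(data)
        eta = 1.0 / (lam * t)                       # decreasing step size
        margin = y * sum(wi * xi for wi, xi in zip(w, x))
        w = [(1.0 - eta * lam) * wi for wi in w]    # regularisation shrink
        if margin < 1.0:                            # hinge loss is active
            w = [wi + eta * y * xi for wi, xi in zip(w, x)]
    return w


def predict(w, x):
    """Linear decision rule: the sign of the weighted sum."""
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) > 0 else -1


data = make_data(1000, random.Random(1))
w = train_linear_svm(data)
train_acc = sum(predict(w, x) == y for x, y in data) / len(data)

# Each weight is directly readable: sign = direction, size = influence.
for name, weight in zip(FEATURES + ["bias"], w):
    print(f"{name:>16}: {weight:+.2f}")
print(f"training accuracy: {train_acc:.2f}")
```

In the printed weights, biomarker_a should come out positive and biomarker_b negative, and the unrelated feature should stay close to zero. A clinician can inspect this kind of summary in a way that is much harder for the millions of parameters in a deep network.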

The second step in facilitating the entry and establishment of AI in healthcare is Infrastructure. What do I mean by infrastructure? At this stage, even in developed countries, hospitals do not necessarily have the digital platforms and data warehouse structures that machine learning models need to operate successfully. Many hospitals are still grappling with the roll-out of electronic health records, a platform that will be essential for machine learning algorithms to mine and query patient data. Also, many machine learning models need structured data for training (although some models can work on unstructured data, as this application shows). Structuring data involves labelling it and creating data warehouses, and not all hospitals, facing budget crunches, have this infrastructure or capability. Further, if AI applications are to be used in clinical practice and healthcare delivery, the clinical and administrative workforce and the patient community need to be educated about AI. How many healthcare organizations have this level of infrastructure readiness? I doubt many. So infrastructure issues most certainly need to be addressed before one can think of using AI in healthcare.

The next step, Policy, is also critical. Policy covers both governmental and institutional strategies to guide the deployment of AI for healthcare delivery, and regulatory frameworks that facilitate and regulate the market entry of AI medical software. There has definitely been progress here, with many governments, national regulatory bodies, medical professional bodies and think tanks issuing guidance on the matter. Yet gaps remain: many of these guidance documents are theoretical or cursory, or are not linked to existing infrastructure. Worse still, in some countries such policies and guidance don't exist at all.
Another issue is the limited funding available to support AI research and commercialization, which has significantly hampered innovation and the indigenous development of AI medical applications.

The final step that needs to be considered is Governance. This step covers not only regulatory frameworks at the national level (necessary to scrutinize and validate AI applications) but also monitoring and evaluation frameworks at the institutional level. It also covers the requirement to mitigate the risks involved in applying AI in clinical care and the need to create patient-centric AI models. These last two elements are vital to clinical governance and continuous quality improvement. Many institutions have issued ethical guidelines for the application of AI in healthcare, but I have yet to see clinical governance models for the use of AI in clinical care. It is critical that clinical governance models for the application of AI in healthcare delivery are developed.

Appropriately addressing the steps I have listed above (Technology, Infrastructure, Policy and Governance) will most certainly facilitate the entry and establishment of AI in healthcare. And with the accelerating development of AI technology and growing interest in AI among policy makers, clinical bodies and healthcare institutions, maybe we are not that far away from seeing this happen.

2 Comments

Raj Srinivasan

14/10/2019 03:10:33 am

Is there a reference or source for the slide: technology, infrastructure, policy, governance. thanks


Sandeep Reddy

15/10/2019 05:00:05 pm

I developed the illustration, so source is myself :-)



Author: Health System Academic
