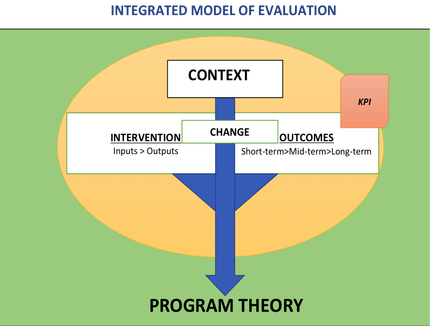

(I originally published this article on LinkedIn.)

I. Background

Program evaluation is a well-established methodology for assessing the effectiveness and efficiency of programs. The ways of undertaking program evaluations have become diverse and complex over the years. However, program evaluation approaches can be grouped into two main categories: method-driven evaluation and theory-driven evaluation. With the method-driven approach, the emphasis is on the methods (qualitative, quantitative or mixed) employed to assess the success of the program. In contrast, theory-driven evaluation emphasises the centrality of the program theory, with methods chosen for their suitability to test the theory.

Each approach has its strengths. Method-driven evaluation, which is commonly used, is usually faster to implement and provides results that are easier for stakeholders to understand. Theory-driven evaluation produces results that are context specific and thus more accurate.

However, each approach has its limitations too. The method-driven approach, which in most instances does not emphasise the context or analyse the intervention, provides results that are somewhat generic. The theory-driven approach takes longer to implement and provides results that are sometimes too abstract or complex for stakeholders to understand and act on. Also, some have criticised theory-driven approaches as too academic and not stakeholder friendly.

II. Integrated Approach

Through this paper, the author presents an integrated model, which employs the best of the traditional method-driven and theory-driven approaches while addressing the limitations of each. The principle behind the development of the integrated model is to ensure stakeholders gain the greatest value from commissioning a program evaluation.
At the moment, most current evaluation approaches come with inherent limitations in addressing stakeholder needs. This is unhelpful, as a commissioned evaluation needs not only to assess the program but also to present solutions to the issues identified. By presenting weak or abstract results that cannot be followed up, current models do not necessarily benefit stakeholders.

To address this major limitation, the integrated model utilises components from both evaluation approaches that have, over the years, proven practical for assessing programs. The model also includes innovative elements to ensure that the results it delivers are useful to stakeholders. It includes traditional aspects of program evaluation such as the program logic components: Inputs, Outputs, Outcomes and Key Performance Indicators (KPI). It also includes theory-driven elements such as Context and Program Theory, which are often ignored in traditional program evaluation models to the detriment of the validity of the results. By incorporating practical and useful components and leaving out esoteric concepts that indulge academics more than stakeholders, the integrated model can be deployed in realistic time frames.

The key focus areas of the integrated model (IMoE) are as follows:

A. Program Theory: a causal statement outlining the expected outcomes of the program intervention in a particular context. The program theory is developed ahead of the program assessment by consulting stakeholders, the literature and other sources. It is then tested throughout the course of the evaluation using appropriate methods. Incorporating the program theory component in the IMoE ensures that the views of stakeholders are gathered in advance and that a theory is developed about how the program is working or not working.
The program theory also considers the context in which the program was introduced, thus tailoring the assessment and solutions to that context. By refining or revising the theory later, the evaluation ensures assumptions are tested and appropriate solutions are presented if the program is not working.

B. Context: describes the situation in which the program has been introduced and is operating. The context includes geopolitical, economic and other circumstances that influence the program’s implementation and outcomes. The context component is often ignored in many generic evaluation approaches, leaving assessments either incomplete or invalid. Programs don’t succeed or fail merely because of the resources or change brought in by the program but also because of the context in which they were introduced; ignoring the context in the assessment lessens the credibility of the evaluation results. Therefore, the IMoE includes and emphasises the description and assessment of context.

C. Intervention: includes the resources and outputs being introduced through the program. Inputs and outputs are data collected in regular evaluations, but the IMoE groups them under the ‘Intervention’ category to distinguish them from the ‘Change’ and ‘Outcomes’ components of the model.

D. Change: incorporates variations that have occurred as a result of the program intervention. The changes can be positive or negative. Positive changes are those that support program objectives, and negative changes are those that deter their achievement. The changes are to be stated in the preliminary program theory and assessed during the evaluation.

E. Outcomes: the end results of a program, i.e. the objectives or goals the program set out to achieve. Depending on the duration of the program, short-term, mid-term or long-term outcomes are considered in the evaluation.
The outcomes are not assessed directly but through key performance indicators (KPI) developed by the evaluator in consultation with stakeholders. The KPI can be included in the program theory, but this is optional.

III. Discussion

As can be gathered, the emphasis of the IMoE is not on the methods or academic interests of the evaluator but on the usefulness of the evaluation results to stakeholders, i.e. are the results valid and can they be acted upon? This is achieved through incorporation of the program theory and emphasis on the context, intervention and change. Also, the emphasis on the program theory means stakeholders are involved from the very onset, while their assumptions are given the opportunity to be tested through a rigorous approach. As the IMoE incorporates context in the construction of the program theory, it ensures the results are tailored to the particular program, organisation and scenario. Further, by incorporating program logic elements, the IMoE ensures the implementation is practical, results are understandable to stakeholders, and the model is not restricted to impact assessments only.

The IMoE has several strengths, as it brings together the best of the traditional and theory-driven approaches to program evaluation. While it incorporates several components from both approaches, combining them does not result in a complex or unwieldy model. In fact, a streamlined, stakeholder-centric process is constructed. If there is a limitation to the IMoE, it is that the model is conceptual at this stage and is yet to be implemented. However, steps are being taken to employ the IMoE in various healthcare settings.

IV. Bibliography

1) WCSRM. Introduction to Evaluation. Web Centre for Social Research Methods. 2017. Available from: https://www.socialresearchmethods.net/kb/intreval.php
2) Chen H, Rossi PH. Introduction: Integrating theory into evaluation practice. In: Chen H, Rossi P, editors. Using theory to improve program and policy evaluations. Westport, CT: Greenwood Press; 1992. p. 2–11.
3) Chen H. Theory-driven evaluations. Newbury, CA: Sage Publications; 1990. p. 328.
4) Hansen MB, Vedung E. Theory-based stakeholder evaluation. Am J Eval. 2010;31(3):295–313.
5) Marchal B, Van Belle S, Westhorp G, Peersman G. Realist Evaluation Approach [Internet]. 2015. Available from: http://betterevaluation.org/approach/realist_evaluation
6) Porter S. Realist evaluation: an immanent critique. Nurs Philos [Internet]. 2015;16(4):239–51. Available from: http://doi.wiley.com/10.1111/nup.12100
7) Cojocaru S. Clarifying the theory-based evaluation. Rev Cercet si Interv Soc. 2009;26(1):76–86.
8) White H. Theory-based impact evaluation: principles and practice. Journal of Development Effectiveness. 2009. p. 271–84.
9) Christie CA, Alkin MC. The User-Oriented Evaluator’s Role in Formulating a Program Theory. Evaluation. 2003;24(3):373–85.

1 Comment

19/3/2022 12:25:13 pm

I like how you mentioned that the programs that you should follow are a positive impact on production. My brother said to me that he and a friend are preparing to do a program evaluation of the feedback on their business products and asked if I had any suggestions on what the best course of action would be. Thank you for your interesting article, and I'll be sure to inform him that they can consult a well-known program evaluation service for more specific information.


Author: Health System Academic
