
In recent years, there has been a great deal of coverage about the dearth of PhD-qualified AI data scientists and the salaries that qualified candidates can command. One such piece can be found here: NYTimes article. Universities, in turn, complain that their PhD-qualified AI scientists are being poached by industry, which itself demonstrates the demand for them: Guardian Article. Many universities are also opening numerous funded AI PhD positions, such as this university: Leeds University. Isn't it then obvious that a PhD in AI technology should be on every data scientist's to-do list? Well, as one who briefly contemplated doing a second PhD (focusing on swarm intelligence and multi-agent systems in healthcare) and who spent some time researching whether completing a PhD is necessary to be across AI, I found it detrimental to undertake a PhD focusing on a specific AI algorithmic approach. Let me explain why.


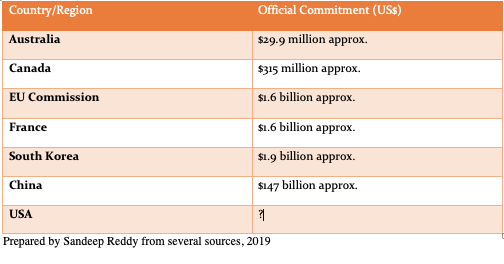

On 11th February, the US administration formalized the proposals made during last year's White House summit on AI through an executive order, but there was no mention of the amount that will be set aside for investment in AI (beyond statements about prioritizing such investment). This compares with official commitments by other countries/regions:

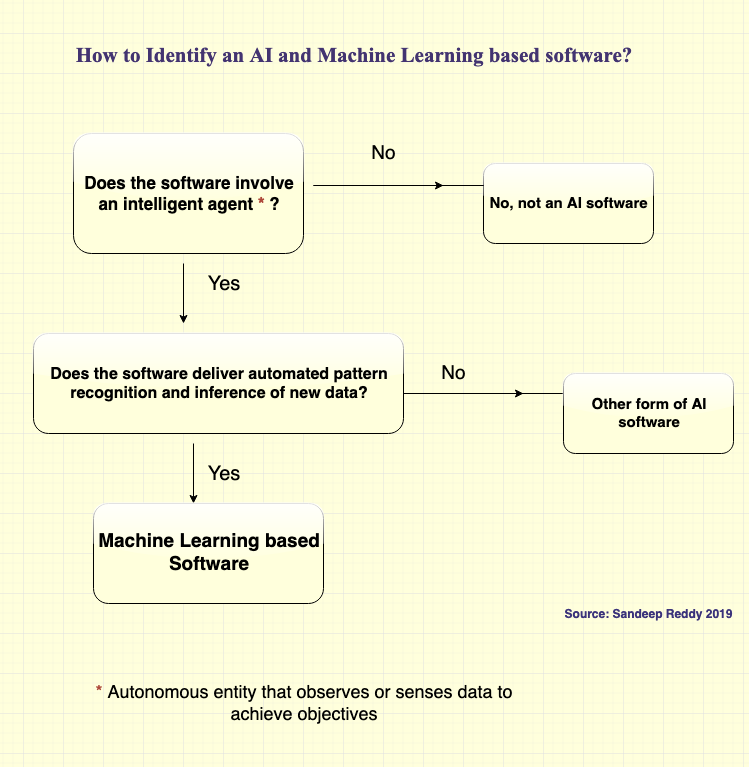

With the tendency nowadays for many 'software as a service' providers to label their software as 'AI' or add a component labelled as AI, it is hard to distinguish what is real AI-based software and what is not. Some vendors state that only machine learning (including deep learning) based software is real AI. However, AI is much more than machine learning and involves other forms such as reinforcement learning, swarm intelligence, genetic algorithms and even some forms of robots. To make it simple for clients/purchasers/organizations, I have developed this simple chart to identify AI and machine learning based software. This chart does not cover all forms of AI software, as currently most AI software being offered in the market is machine learning based (I am creating a more comprehensive chart, which encompasses all the schools and tools of AI, including the symbolist, connectionist, analogist, Bayesian and evolutionary approaches). I also wanted to keep this chart simple so it is easy for buyers to use (so you data scientists and mathematicians, feel free to comment but be forgiving). Anyway, here you are:

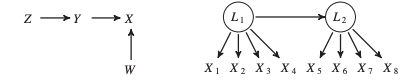

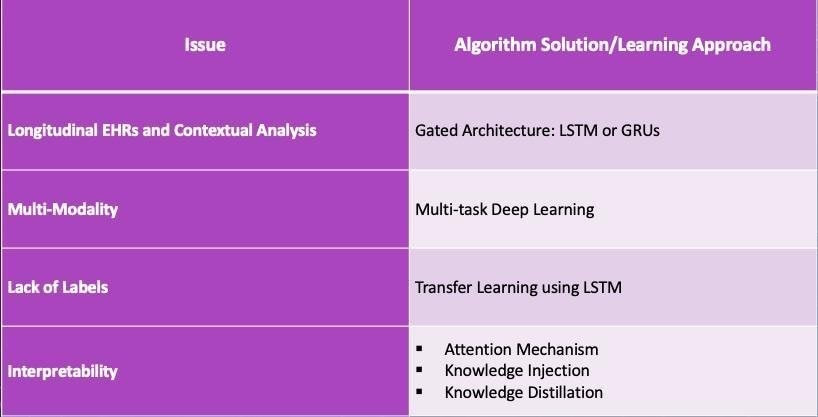

Recently a twitter war (OK, maybe a vigorous debate) erupted between proponents of deep learning models and long-standing critics of deep learning approaches in AI (I won't name the lead debaters here, but a simple Google search will help you identify them). After following the conversation and my own study of the maths of probabilistic deep learning approaches, I have been thinking about alternative approaches to current bayesian/back-propagation based deep learning models. The versatility and deep impact (pun intended) of neural network models in pattern recognition, including in medicine, is well documented. Yet, the statistical approach/maths of deep learning is largely based on probability inference/Bayesian approaches. The neural networks of deep learning remember classes of relevant data and recognise to classify the same or similar data the next time around. It is kind of what we term 'generalisation' in research lingo. Deep learning approaches have received acclaim because they are able to better capture invariant properties of the data and thus achieve higher accuracies compared to their shallow cousins (read supervised machine learning algorithms). As a high-level summary, deep learning algorithms adopt a non-linear and distributed representation approach to yield parametric models that can perform sequential operations on data that is fed into it. This ability has had profound implications in pattern recognition leading to various applications such as voice assistants, driver-less cars, facial recognition technology and image interpretation not to mention the development of the incredible AlphaGo, which has now evolved into AlphaGo zero. The potential of application of deep learning in medicine, where big data abounds, is vast. 
Some recent medical applications include EHR data mining and analysis, medical imaging interpretation (the most popular being diabetic retinopathy diagnosis), medical voice assistants, and non-knowledge-based clinical decision support systems. Better yet, the realisation of this potential is just beginning as newer deep learning algorithms are developed in the various Big Tech and academic labs. Then what is the issue? Aside from the often-cited 'interpretability/black-box' issue associated with neural networks (I have previously written that even this may not be a big issue, with solutions like attention mechanisms, knowledge injection and knowledge distillation now being available) and their limitations in dealing with hierarchical structures and global generalisation, there is the 'elephant in the room', i.e. no inherent representation of causality. In medical diagnosis, probability is important, but causality is more so. In fact, the whole science of medical treatment is based on causality. You don't want to use doxycycline instead of doxorubicin to treat Hodgkin's lymphoma, or vice versa for Lyme disease. How do you know if the underlying condition is Hodgkin's lymphoma or Lyme disease? I know: different diseases and different presentations, of course. However, the point is that the diagnosis is based on a combination of objective clinical examination, physical findings, sero-pathological tests and medical imaging, all premised on a causation sequence, i.e. Borrelia leads to Lyme disease and EB virus/family history leads to Hodgkin's. This is why we adopt randomised controlled trials, even with their inherent faults, as the gold standard for incorporation of evidence-based treatment approaches. If understanding of causal mechanisms is the basis of clinical medicine and practice, then there is only so much deep learning approaches can do for medicine. 
This is why I believe there needs to be a serious conversation amongst AI academics and the developer community about adoption of 'causal discovery algorithms' or better yet 'hybrid approaches (a combination of probabilistic and causal discovery approaches)'. We know 'correlation is not causation', yet under appropriate conditions we can make inferences from correlation to causation. We now have algorithms that search for causal structure information from data. They are represented by causal graphical models as illustrated below: Figure I. Acyclic Graph Models (Source: Malinsky and Danks, 2017) While the causal search models do not provide you a comprehensive causal list, they provide enough information for you to infer a diagnosis and treatment approach (in medicine). There have been numerous successes documented with this approach and different causal search algorithms developed over the past many decades. Professor Judea Pearl, one of the godfathers of causal inference approaches and an early proponent of AI has outlined structural causal models, a coherent mathematical basis for the analysis of causes and counterfactuals. You can read an introduction version here: https://www.ncbi.nlm.nih.gov/pmc/articles/PMC2836213/ I think it is probably a time now to pause and think, especially in the context of medicine, if a causal approach is better in some contexts than a deep learning gradient informed approach. Even better if we can think of a syncretic approach. Believe me, I am an avid proponent of the application of deep learning in medicine/healthcare. There are some contexts, where back-propagation is better than hypothetico-deductive inference especially when terrabytes/petabytes of unstructured data accumulates in the healthcare system. Also, there are limitations to causal approaches. Sometimes probabilistic approaches are not that bad as outlined by critics. 
You can read a defence of probabilistic approaches and how to overcome its limitations here: http://www.unofficialgoogledatascience.com/2017/01/causality-in-machine-learning.html However, close-mindedness to an alternative approach to probabilistic deep learning models and reliance on this approach is a sure-bet to failure of realisation of the full potential of AI in Medicine and even worse failure of the full adoption of AI in Medicine. To address this some critics of prevalent deep learning approaches are advocating for a Hybrid approach, whereby a combination of deep learning and causal approaches are utilised. I strongly believe there is merit in this recommendation, especially in the context of application of AI in medicine/healthcare. A development in this direction is the 'Causal Generative Neural Network'. This framework is trained using back-propagation but unlike previous approaches, capitalises on both conditional independences and distributional asymmetries to identify bivariate and multivariate causal structures. The model not only estimates a causal structure but also differential generative model of the data. I will be most certainly following the developments with this framework and more generally hybrid approaches especially in the context of it's application in medicine but I think all those interested in the application of AI in Medicine should be having a conversation about this matter. Reference: Malinsky, D. & Danks, D. (2017). Causal discovery algorithms: A practical guide. Philosophy Compass 13: e12470, John Wiley & Sons ltd. Earlier this year American researchers Xiao, Choi and Sun published a systematic review about the application of deep learning methods to extract and analyse EHR data in the prestigious JAMIA journal. 
The five categories of analytic tasks for which they found deep learning was being used were: 1) disease detection/classification; 2) sequential prediction of clinical events; 3) concept embedding, where feature representations are derived algorithmically from EHR data; 4) data augmentation, whereby realistic data elements or patient records are created; and 5) EHR data privacy, where deep learning was used to protect patient privacy. More importantly, they identified challenges in the application and corresponding solutions. I have summarised the issues and solutions in this table:

Why a third AI winter is unlikely to occur and what it means for health service delivery?

23/10/2018

Around the late 1970s, the so-called first 'AI winter' emerged because of technical limitations (the failure of machine translation and connectionism to achieve their objectives). Then, in the late 1980s, the second AI winter occurred for financial/economic reasons (DARPA cutbacks, restrictions on new spending in academic environments and the collapse of the LISP machine market). With the current re-emergence of AI and the increasing attention being paid to it by governments and corporates, some are speculating that another AI winter may occur. Here is a lay commentary as to why this may occur: https://www.ft.com/content/47111fce-d0a4-11e8-9a3c-5d5eac8f1ab4 and here is a more technical commentary: https://blog.piekniewski.info/2018/05/28/ai-winter-is-well-on-its-way/

What these commentators don't take into account is that the conditions that prevailed in the late 70s and 80s are not the conditions prevalent now. The current demand for AI has emerged because of actual needs (the need to compute massive amounts of data). With the rapid rise in computational power, increased acquisition of data from all quarters of life, increased investment by governments and corporates, dedicated research units in both academia and corporate bodies, and integration with other innovative IT areas like IoT, robotics, AR, VR and brain-machine interfaces, there has never been a better time for AI to flourish. What sceptics seem to mistakenly assume is that developers are aiming for Artificial General Intelligence. Maybe there will be a time when this occurs, but nearly all developers are focused on developing narrow AI applications. The rediscovery of the usefulness of early machine learning algorithms and the introduction of newer forms of machine learning algorithms like convolutional neural networks, GANs and Deep Q-networks, coupled with advances in understanding of symbolism, neurobiology, causation and correlational theories, have advanced the progress of AI applications. If commentators stopped expecting that AI systems will replace all human activities and understood that AI is best suited to augment/enhance/support human activity, there would be less pessimism about the prospects of AI. Of course, there will be failures as AI gets applied in various industries, followed by the inevitable 'I told you so' from armchair commentators or sardonic academics, but this will not stop the progress of AI and related systems. On the other hand, if AI researchers and developers can aim for realistic objectives and invite scrutiny of their completed applications, the waffle from the cynics can be put to rest. So where does this leave health services and the application of AI in healthcare? 
Traditionally the clinical workforce have been slow adopters of technological innovations. This is expected as errors and risks in clinical care unlike other industries are less tolerated and many times unacceptable. However, the greatest promise for AI is in its application in healthcare. For long, health systems across the world have been teetering on financial bankruptcy with governments or other entities bailing them out (I can't think of a health system, which runs on substantial profits) . A main component of the costs of running health services is recurrent costs, which includes recurrent administrative and workforce costs. Governments or policy wonks have never been able to come up with a solution to address this except reiterate the tired mantra of early prevention or advocate for deficit plugging or suggest new models of workforce, which are hard to implement. Coupled with this scenario, is the humongous growth in medical information that no ordinary clinician can retain and pass onto their patients or include in their treatment, rapid introduction of newer forms of drugs and treatments and the unfortunate increase in the number of medical errors (In the US, approximately 251,454 deaths are caused per annum due to medical errors). AI Technology, provides an appropriate solution to address these issues considering the potential of AI enabled clinical decision support systems, digital scribes, medical chatbots, electronic health records, medical image analysers, surgical robots, surveillance systems; all of which can be developed and delivered at economical pricing. Of course, a fully automated health service is many years away and AI regulatory and assessment frameworks are yet to be properly instituted. So we won't be realising the full potential of AI in healthcare in the immediate future. However, if health services and clinicians think AI is another fad like Betamax, Palm Pilots, Urine therapy, Bloodletting and lobotomies they are very mistaken. 
The number of successful instances of the application of AI in healthcare delivery is increasing at a rapid rate for it to be a flash in the pan. Also, many governments including the Chinese, UAE, UK and French governments have prioritised the application of AI in healthcare delivery and continue to invest in its growth. While AI technology will never replace human clinicians it will most certainly replace clinicians and health service providers who do not learn about it or engage with it. Therefore it is imperative for health service providers, medical professional bodies, medical schools and health departments to actively incorporate AI/technology (machine learning, robotics and expert systems) in their policies and strategies. If not, it will be a scenario of too little and too late depriving patients of the immense benefits of personalised and cost-efficient care that AI enabled health systems can deliver. The most common AI systems that are currently emerging are machine learning, natural language processing, expert systems, computer vision and robots. Machine Learning is when computer programs are designed to learn from data and improve with experience. Unlike conventional programming, machine learning algorithms are not explicitly coded and can interpret situations, answer questions and predict outcomes of actions based on previous cases. These processes typically run independently in the background and incorporate existing data, but also “learn” from the processing that they are doing. There are numerous machine learning algorithms, but they can be divided broadly into two categories: supervised and unsupervised. In supervised learning, algorithms are trained with labelled data, i.e. for every example in the training data there is an input object and output object and the learning algorithm discovers the predictive rule. 
In unsupervised learning, the algorithm is required to find patterns in the training data without necessarily being provided with such labels. A leading form of machine learning termed Deep Learning is considered particularly promising.
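The distinction between the two categories can be illustrated with a minimal sketch. This is only a toy under stated assumptions: the single-feature "lab value" data, the class names and the helper functions `fit_nearest_centroid`, `predict` and `two_means` are all hypothetical and chosen for brevity, and the nearest-centroid and two-cluster k-means methods are stand-ins rather than the algorithms any particular product uses. The supervised part learns from the labels; the unsupervised part sees only the values and has to find the grouping itself.

```python
from statistics import mean

# Hypothetical toy data: one feature per patient (e.g. a lab value) plus a label.
labelled = [(4.8, "normal"), (5.1, "normal"), (5.4, "normal"),
            (8.9, "elevated"), (9.4, "elevated"), (10.2, "elevated")]


def fit_nearest_centroid(examples):
    """Supervised: use the labels to compute one centroid (mean) per class."""
    by_label = {}
    for value, label in examples:
        by_label.setdefault(label, []).append(value)
    return {label: mean(values) for label, values in by_label.items()}


def predict(centroids, value):
    """Predict the label whose centroid is closest to the new value."""
    return min(centroids, key=lambda label: abs(centroids[label] - value))


def two_means(values, iterations=10):
    """Unsupervised: split unlabelled values into two clusters (1-D k-means, k=2).

    Assumes at least two distinct values and iterations >= 1.
    """
    low, high = min(values), max(values)  # initial cluster centres
    for _ in range(iterations):
        cluster_low = [v for v in values if abs(v - low) <= abs(v - high)]
        cluster_high = [v for v in values if abs(v - low) > abs(v - high)]
        low, high = mean(cluster_low), mean(cluster_high)
    return sorted(cluster_low), sorted(cluster_high)


centroids = fit_nearest_centroid(labelled)
print(predict(centroids, 9.0))          # label predicted from labelled examples

unlabelled = [value for value, _ in labelled]
print(two_means(unlabelled))            # groups found without any labels
```

Note that the unsupervised routine recovers two groups but has no way of naming them "normal" or "elevated"; that interpretation still has to come from a human or from labelled data.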

Natural Language Processing (NLP) is a set of technologies for human-like processing of any natural language, oral or written, and includes both the interpretation and production of text, speech, dialogue etc. NLP techniques include symbolic, statistical and connectionist approaches and have been applied to machine translation, speech recognition, cross-language information retrieval, human-computer interaction and so forth. Some of these technologies, and certainly their effects, we see in everyday IT products, such as email scanning for advertisements or auto-complete text for searches. “Expert systems” is another established field of AI, in which the aim is to design systems that carry out significant tasks at the level of a human expert. Expert systems do not yet demonstrate general-purpose intelligence, but they have demonstrated equal and sometimes better reasoning and decision making in narrow domains compared to humans, while conducting these tasks similarly to how a human would. To achieve this function, an expert system can be provided with a computer representation of knowledge about a particular topic and apply this to give advice to human users. This concept was pioneered in medicine in the 1970s by MYCIN, a system used to diagnose infections, and INTERNIST, an early diagnosis package. Recent knowledge-based expert systems combine more versatile and more rigorous engineering methods. These applications typically take a long time to develop and tend to have a narrow domain of expertise, although they are rapidly expanding. Outside of healthcare, these types of systems are often used for many other functions, such as trading stocks. Another area is Computer Vision systems, which capture images (still or moving) from a camera and transform them or extract meaning from them to support understanding and interpretation. 
Replicating the power of human vision in a computer program is no easy task, but Computer Vision attempts to do so by relying on a combination of mathematical methods, massive computing power to process real-world images, and physical sensors. While great advances have been made with Computer Vision in such applications as face recognition, scene analysis, medical imaging and industrial inspection, the ability to replicate the versatility of human visual processing remains elusive. The final technique we cover here is robots, which have been defined as “physical agents that perform tasks by manipulating the physical world”, for which they need a combination of sensors (to perceive the environment) and effectors (to achieve physical effects in the environment). Many organisations have had increasing success with limited robots, which can be fixed or mobile. Mobile “autonomous” robots that use machine learning to extract sensor and motion models from data, and which can make decisions on their own without relying on an operator, are most relevant to this commentary; “self-driving” cars are well-known examples.

To borrow Professor Dan Ariely's quote about Big Data, AI is like teenage sex: everyone talks about it, nobody really knows how to do it, everyone thinks everyone else is doing it, so everyone claims they are doing it.

However, this may be too harsh a commentary on the state of AI affairs. Notwithstanding the overstatements by some about the capabilities of AI, there is substance behind the hype, with the demonstrated benefits of deep learning, natural language processing and robotics in various aspects of our lives. In Medical Informatics, the area I can credibly comment on, neural networks and data mining have been employed to enhance the ability of human clinicians to diagnose and predict medical conditions, and in some instances, like medical imaging and histo-pathological diagnosis, AI applications have met or exceeded the accuracy of human clinicians. In terms of economics, AI deserves the attention it is getting. The top 100 AI companies have raised more than US$11.7 billion in revenue, and even back in 2015 about US$49 billion in revenue was generated in the North American market alone. Countries like China have invested massively in AI research and start-ups, with some forecasting that the Chinese AI industry will exceed US$150 billion by 2030. In other spheres, like the political one, AI has received high-profile recognition with the appointment of the world's first AI Minister in the U.A.E. However, one has to be aware of the limitations of AI and the amount of research and analysis that is yet to be undertaken before we can confidently accept a ubiquitous AI system in our lives. I state this as a vocal proponent of the application of AI techniques, especially in medicine (as my earlier articles and book chapter indicate), but also as one who is aware of the 'AI Winter' and 'IBM Watson/MD Anderson' episodes that have occurred in the past. Plus there is the incident that happened yesterday, where a driverless Uber car was responsible for a fatality in Arizona. So in this article I list, from a healthcare perspective, three main limitations in terms of adoptability of AI technologies. 
This analysis is based on current circumstances, which considering the rapid developments that are occurring in AI research may not apply going into the future. 1) Machine Learning Limitations: The three main limitations I see with machine learning are Data-feed, Model Complexity and Computing Times. In machine learning the iterative aspect of learning is important. Good machine learning models rely on data preparation and ongoing availability of good data. If you don't have good data to train the machine learning model (in supervised learning) and there is no new data, the pattern recognition ability of the model is moot. For example in the case of radiology, if the images being fed into the deep learning algorithms tend to come with underlying biases (like images from a particular ethnic group or images from a particular region) the diagnostic abilities and accuracy rates of the model would be limited. Also, reliance on historical data to train algorithms may not be particularly useful for forecasting novel instances of drug side-effects or treatment resistance. Further, cleaning up and capturing data that are necessary for these models to function will provide a logistical challenge. Think of the efforts required to digitize handwritten patient records. With regards to model complexity, it will be pertinent to describe deep learning (a form of machine learning) here. Deep Learning in essence is a mathematical model where software programs learn to classify patterns using neural networks. For this learning, one of the methods used is backpropagation or backprop that adjusts mathematical weights between nodes-so that an input leads to right outputs. By scaling up the layers and adding more data, deep learning algorithms are to solve complex problems. The idea is to match the cognition processes a human brain employs. However, in reality, pattern recognition alone can't resolve all problems, especially so all medical problems. 
For example, when a decision has to be made in consultation with the family to take off mechanical ventilation for a comatose patient with inoperable intra-cerebral hemorrhage. The decision making in this instance is beyond the capability of a deep learning based program. The third limitation with machine learning is the current capabilities of computational resources. With the current resources like GPU cycles and RAM configurations, there are limitations as to how much you can bring down the training errors to reasonable upper bounds. This means the limitation impacts on the accuracy of model predictions. This has been particularly pertinent with medical prediction and diagnostic applications, where matching the accuracy of human clinicians in some medical fields has been challenging. However, with the emergence of quantum computing and predicted developments in this area, some of these limitations will be overcome. 2) Ethico-legal Challenges: The ethico-legal challenges can be summarized as 'Explainability', 'Responsibility' and 'Empathy'. A particular anxiety about artificial intelligence is that decisions made by complex opaque algorithms cannot be explained even by the designers (the black box issue). This becomes critical in the medical field, where decisions either made directly or indirectly through artificial intelligence applications can impact on patient lives. If an intelligent agent responsible for monitoring a critical patient incorrectly interprets a medical event and administers a wrong drug dosage, it will be important for stakeholders to understand what led to the decision. However, if the underlying complexity of the neural network means the decision making path cannot be understood; it does present a serious problem. The challenge in explaining opaque algorithms is termed as the interpretability problem. Therefore, it is important for explainable AI or transparent AI applications to be employed for medical purposes. 
The medical algorithm should be fully auditable when and where (real-time and after the fact) required. To ensure acceptability of AI applications in the healthcare system, researchers /developers need to work on accountable and transparent mathematical structures when devising AI applications. When a robotic radical prostatectomy goes wrong or when a small cell pulmonary tumor is missed in an automated radiology services, who becomes responsible for the error? The developer? The hospital? The regulatory authority(which approved the use of the device or program)? As AI applications get incorporated in medical decision making and interventions, regulatory and legal bodies need to work with AI providers to set up appropriate regulatory and legal frameworks to guide deployment and accountability. Also, a thorough process of evaluation of new AI medical applications ,before they can be used in practice, will be required to be established especially if autonomous operation is the goal. Further, authorities should work with clinical bodies to establish clinical guidelines/protocols to govern application of AI programs in medical interventions. One of the important facets of medical care is the patient-clinician interaction/interface. Even with current advances in Robotics and intelligent agent programming, the human empathetic capabilities far exceed that those of AI applications. AI is dependent on a statistically sound logic process that intends to minimize or eliminate errors. This can be termed by some as cold or cut-and-dry unlike the variable emotions and risk taking approach humans employ. In medical care, clinicians need to adopt a certain level of connection and trust with the patients they are treating. It is hard to foresee in the near future, AI driven applications/robots replacing humans in this aspect. 
However, researchers are already working on classifying and coding emotions (see: https://www2.ucsc.edu/dreams/Coding/emotions.html) and robots are being developed with eerily realistic facial expressions (http://www.hansonrobotics.com/robot/sophia/), so maybe the cynicism about AI's usefulness in this area is not all that deserved? 3) Acceptability and Adoptability: While I think the current technological limitations with machine learning and robotics can be addressed in the near future, it will be a harder challenge for AI providers to convince the general public to accept autonomous AI applications, especially those that make decisions impacting on their lives. AI to an extent is pervasive already with availability of voice-driven personal assistants, chatbots, driverless cars, learning home devices, predictive streaming..etc. There hasn't been a problem for us in accepting these applications in our lives. However, when you have AI agents replacing critical positions that were previously held by humans ; it can be confronting especially so in medical care. There is thus a challenge for AI developers and companies to ease the anxiousness of public in accepting autonomous AI systems. Here, I think, pushing explainable or transparent applications can make it easier for the public to accept AI agents. The other challenge, from a medical perspective, is adoption of AI applications by clinicians and healthcare organizations. I don't think the concern for clinicians is that AI agents will replace them but the issues are rather the limited understanding clinicians have about AI techniques (what goes behind the development), apprehensiveness about the accuracy of these applications especially in a litigious environment and skepticism as to whether the technologies can alleviate clinician's stretched schedules. 
For healthcare organizations, the concerns are whether there are cost efficiencies and cost benefits from investment in AI technologies, whether their workforce will adopt the technology and how clients of their services will perceive their adoption of AI technologies. To overcome these challenges, AI developers need to co-design algorithms with clinicians and proactively undertake clinical trials to test the efficacy of their applications. AI companies and healthcare organizations also need to have an education and marketing strategy to inform the public/patients about the benefits of adopting AI technologies.

Don't rule out AI

I outline the above concerns largely to respond to the misconceptions and overhyping of AI by the media and those who are not completely conversant with the mechanics behind AI applications. Overhyping AI affects the acceptability of AI, especially if it leads to adoption of immature or untested AI technologies. However, there is much to lose if healthcare organizations rule out adoption of AI technologies. AI technologies can be of immense help in healthcare delivery:

Why healthcare systems should adopt A.I. in healthcare delivery? (Part 1 - Economic Benefits)

18/2/2018

(This article was first posted on LinkedIn)

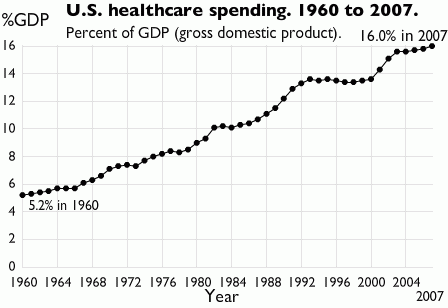

Keeping aside the buzz and hype about utilization of A.I.* in various disciplines and what mistakenly many assume it can accomplish, there are real merits in governments and decision-makers setting out strategies for adoption of A.I. in health service delivery. In this article, I will review the economic benefits of the application and in later articles, the other advantages. Of those health systems analyzed by the Commonwealth Fund in their performance rankings, people in the U.S. and Australia had the highest out-of-pocket costs when accessing healthcare. This issue has arisen not for the lack of investment in healthcare by governments in these countries. In 2016, the healthcare spending in the U.S. increased by 4.3 percent to attain a figure of US$3.3 trillion or about US$10,348 per person. Of the US$3.3 trillion, US$1.1 trillion was spent on hospital care, US$92 billion on allied health services and about US$162.5 billion on nursing care facilities and retirement communities. Together these expenditures constituted approximately 45% of the 2016 health spend. Of the total health expenditure, individuals/households contribution matched the government expenditure (28% of the total health expenditure). In Australia in 2015-16, the total healthcare spend was AU$170.4 billion (a AU$6 billion increase compared to 2014-15). Of this, the government expenditure on public hospital services was AU$46.9 billion and on primary healthcare AU$34.6 billion. Expenditure by individuals accounted for 52.7% of non-government expenditure or 17.3% of total health expenditure. While governments continue to increase spending this hasn't really made a serious dent on out-of-pocket costs. One of the key component of annual healthcare spending and pertinent to this article is the recurrent healthcare expenditure. Recurrent healthcare expenditure does not involve acquisition of fixed assets and expenditure on capital but largely expenditure on wages, salaries and supplements. 
In Australia, recurrent healthcare expenditure constitutes a whopping 94% of total expenditure, and in the U.S. about US$664.9 billion was spent on physician and clinical wages in 2016. Considering that wages are unlikely to go down, it is hard to imagine recurrent healthcare expenditure, and consequently total healthcare spend, decreasing.

A.I. technology, which has been around for decades but has only recently received widespread attention, is increasingly being applied in various aspects of healthcare (primarily in the U.S.). While I have argued in an earlier article that A.I. can never totally replace human clinicians**, a number of American hospitals are using A.I. technology to leverage their consultants' expertise, and in some cases the A.I. applications are outperforming them. I won't discuss the technologies and applications here (they will be covered in my book chapter^ and in subsequent articles), but I will discuss the costs of developing these technologies from a healthcare point of view.

With traditional software development, the usual phases are discovery and analysis, prototype implementation and evaluation, minimum viable product, and product release. The costs associated with these phases, depending on the project size and the complexity of the software, can range anywhere from US$10,000 to US$100,000^^. However, the development of A.I. programs (here I consider machine learning based programs, not robotic applications, which adopt a different development model and consequently different cost models) has distinctive features to be considered. These include the acquisition of large data sets to train the system and the fine-tuning of the algorithms that analyze the data. Where sufficiently large data sets cannot be obtained, data augmentation can be considered. Costs will be incurred in acquiring the data sets if they are not already available.
However, in the context of healthcare, government agencies and hospitals can provide this data to developers at no cost (if the A.I. program is being developed or customized for their exclusive use). So the factor with the greatest impact on cost is whether the data is structured or not. Data doesn't have to be structured; several machine learning algorithms are designed to analyze unstructured data. However, developing programs to review unstructured data incurs more costs. Even when structured data is available, there are processes like data cleansing and data type conversion, which add to the costs.

The next distinctive feature is fine-tuning/customizing the machine learning algorithm to suit the organization's requirements. As the healthcare context requires the program to have a high degree of accuracy (fewer false negatives and a high rate of true positive identification, epidemiologically speaking), many rounds of refinement of the algorithm will be required. Even considering these distinctive features, which will add US$50,000 to US$300,000^^ to the baseline costs, you are looking at total costs in the range of US$60,000 to US$500,000^^ (depending on the organization's requirements and the complexity of the A.I. software).

If we consider that about US$664 billion (2016) in the U.S. and AU$64 billion (2015-2016) in Australia was spent on hospital recurrent expenditure alone, a mere 0.016% allocation of that spend to developing A.I. technologies could fund the development of at least 18 (hospital-focused, advanced machine learning based) applications per annum. The ROI is not just economic; it also includes improved patient outcomes through the avoidance of medical errors, improved medical/laboratory/radiological diagnosis and better predictability of chronic disease outcomes.
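
The allocation arithmetic can be sketched in a few lines of Python. This is only a rough illustration using the figures quoted above. The article does not state how the figure of 18 applications was derived, so the function and constant names are hypothetical, and the sketch assumes no currency conversion between AU$ and US$.

```python
# Illustrative arithmetic only; the spend and cost figures are those quoted in the article.
# All names here are hypothetical, and no currency conversion is applied.

ALLOCATION_PER_100K = 16        # 0.016% expressed as 16 parts per 100,000
COST_PER_APP_HIGH = 500_000     # upper bound of total development cost
COST_PER_APP_LOW = 60_000       # lower bound of total development cost

RECURRENT_SPEND = {
    "U.S. (2016, US$)": 664_000_000_000,
    "Australia (2015-16, AU$)": 64_000_000_000,
}


def fundable_apps(spend):
    """Return (budget, apps at upper-bound cost, apps at lower-bound cost)."""
    budget = spend * ALLOCATION_PER_100K // 100_000
    return budget, budget // COST_PER_APP_HIGH, budget // COST_PER_APP_LOW


for label, spend in RECURRENT_SPEND.items():
    budget, at_high_cost, at_low_cost = fundable_apps(spend)
    print(f"{label}: budget {budget:,}; {at_high_cost} to {at_low_cost} applications")
# U.S. (2016, US$): budget 106,240,000; 212 to 1770 applications
# Australia (2015-16, AU$): budget 10,240,000; 20 to 170 applications
```

Under these assumptions, even the smaller Australian allocation at the upper-bound cost comes out at roughly 20 applications a year, which is in the same region as the figure quoted above. Converting AU$ to US$ before dividing would lower that count somewhat.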
Considering the rapid advances machine learning based programs have made in medical prediction, diagnosis and prognosis^, governments and healthcare organizations should seriously consider supporting the development and deployment of A.I. technologies, not only for the serious dent these applications can make in recurrent health expenditure but also for how they can significantly improve patient access.

Footnotes

\* I have a distaste for the term 'A.I.', for reasons I explained in an earlier article, but I use the term because it is widely recognized and accepted as describing computational intelligence based products.

\*\* https://www.linkedin.com/pulse/role-human-clinicians-automated-health-system-dr-sandeep-reddy/

^ 'Use of Artificial Intelligence in Healthcare' (book working title: E-Health, ISBN 978-953-51-6136-3; Editor: Thomas F. Heston, MD)

^^ This estimate does not take into consideration deployment, insurance and marketing costs.

Eric Topol, a Professor of Genomics and a cardiologist, in his 2015 NYTimes bestseller 'The Patient Will See You Now', foresees the demise of the current form of hospital-based acute care delivery (with a shift to delivering care in patients' homes), along with the replacement of human-delivered clinical services by smart systems/devices in the future. I would not go as far as Professor Topol in his assessment of the replacement of human clinicians, but I can easily foresee the automation of a large part of human-led clinical care in the coming decades.

While I do not remotely purport to be an expert on automated medical systems (that will take some more time and neurons), I have undertaken months of research to complete a book chapter on the use of Artificial Intelligence in healthcare (book working title: E-Health, ISBN 978-953-51-6136-3; Editor: Thomas F. Heston, MD), have just completed a hands-on AI programming course delivered by Microsoft, and am undertaking a scoping review of the use of recurrent neural networks in medical diagnosis while preparing the use case for a non-knowledge-based clinical decision support system. On that basis, I can reasonably state where the automation of healthcare delivery is heading.

Before I analyse the 'artificial intelligence take-over of medical care' scenario further, I would like to express my slight distaste for the term 'Artificial Intelligence'. The term derives from the incorrect assumption that intelligence has so far been primarily a biological construct and that any intelligence now being derived outside the biological domain is artificial. In other words, intelligence is framed exclusively in reference to biologically derived intelligence. However, intelligence is a profound entity of its own, with defined characteristics such as learning and reasoning. Human intelligence is not the most that intelligence can be and, as trends go, computing programs with their increasingly advanced algorithms could, in the next decade or so, exceed the learning and reasoning powers of humans in some respects. Therefore, a source-based terminology would be more appropriate for framing intelligence. In other words, computational intelligence would be a more appropriate term than artificial intelligence.

Healthcare has been a fertile domain for computational intelligence (CI) researchers to apply CI techniques, including artificial neural networks, evolutionary computing, expert systems and natural language processing.
The rise in interest and investment in CI research has coincided with the increasing release of CI-driven clinical applications. Many of these applications have automated the three key cornerstones of medical care: diagnosis, prognosis and therapy. So it is not hard to see why commentators, including clinical commentators, are predicting the replacement of human clinicians by CI systems.

Based on current trends in CI research, it is indeed rational to imagine the automation of many tasks led by human clinicians (for convenience, I am focusing on physicians rather than other professions such as nurses and allied health professionals). These include the interpretation of laboratory and imaging results (CI applications are already matching radiologists' accuracy in interpreting MRI, CT and radiological images), the prediction of clinical outcomes (CI applications have successfully predicted acute conditions by reviewing both structured and unstructured patient data) and the diagnosis of various acute conditions (in fact the earliest CI applications, dating back to the 1970s, already had this ability). However, it is hard to imagine CI systems completely replacing human clinicians in conveying diagnoses and discussing complex treatment regimens with patients, especially high-risk patients. There are other areas where it is equally hard to foresee automation of clinical tasks, such as some complex procedures and making final treatment decisions.

So what is to come? I foresee a co-habitation model: a model that accepts the inevitable automation of a significant number of tasks currently performed by human clinicians (the at-risk areas are those where there is less human interaction and where the task follows a structured process; structure means algorithms can be developed more easily, you see) but allows human clinicians to make the final decision and be the lead communicator with patients.

Bibliography

E. Topol, The Patient Will See You Now: The Future of Medicine is in Your Hands. New York: Basic Books, 2015.

J. N. Kok, E. J. W. Boers, W. A. Kosters, P. Van Der Putten, and M. Poel, “Artificial Intelligence: Definition, Trends, Techniques, and Cases,” 2013.

D. L. Poole and A. K. Mackworth, Artificial Intelligence: Foundations of Computational Agents, 2nd Edition. Cambridge University Press, 2017.

B. Milovic and M. Milovic, “Prediction and Decision Making in Health Care using Data Mining,” Int. J. Public Heal. Sci., vol. 1, no. 2, pp. 69–76, 2012.

K. L. Priddy and P. E. Keller, Artificial Neural Networks: An Introduction. Bellingham: SPIE Press, 2005.

S. C. Shapiro, Encyclopedia of Artificial Intelligence, 2nd Edition. New York: Wiley-Interscience, 1992.

R. Scott, “Artificial intelligence: its use in medical diagnosis,” J. Nucl. Med., vol. 34, no. 3, pp. 510–4, 1993.

Author: Health System Academic
